Our primary product is a ChatGPT website chatbot. One of its key features is that we can scrape your website to create context data for the chatbot. Calling that unique would be a stretch, though, since there are 1,000+ similar products out there doing the same thing. We do have one real difference: we simply cannot afford to “say no” …

What I mean is that instead of just saying “it doesn’t work” when confronted with a website we couldn’t crawl, I have manually crawled and debugged these websites, treating every such error as an opportunity to improve our crawler. That is probably why we’ve got a kick ass crawler today, able to digest almost anything you throw at it. As far as I know, we’re the only vendor able to, for instance, crawl a website without a sitemap.
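For readers wondering what crawling without a sitemap can look like in principle, here is a minimal sketch, not our actual crawler: a breadth-first crawl that starts at the landing page and follows `<a href>` links on the same host. The `fetch` callable and the `crawl_without_sitemap` name are hypothetical; `fetch` is assumed to return a page’s HTML, or `None` on failure, so the sketch runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from typing import Callable, List, Optional
from urllib.parse import urldefrag, urljoin, urlparse


class LinkExtractor(HTMLParser):
    """Collects the href values of all <a> elements in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def crawl_without_sitemap(
    start_url: str,
    fetch: Callable[[str], Optional[str]],  # hypothetical: URL -> HTML or None
    max_pages: int = 100,
) -> List[str]:
    """Breadth-first crawl restricted to the start URL's host."""
    host = urlparse(start_url).netloc
    queue = deque([start_url])
    seen = {start_url}
    visited: List[str] = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # skip pages that failed to load
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.hrefs:
            # Resolve relative links and drop "#fragment" parts.
            absolute, _fragment = urldefrag(urljoin(url, href))
            parsed = urlparse(absolute)
            same_site = parsed.scheme in ("http", "https") and parsed.netloc == host
            if same_site and absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited
```

With a dictionary standing in for the web, `crawl_without_sitemap("https://example.com/", pages.get)` visits every page reachable from the landing page while ignoring external and `mailto:` links. A real crawler would also need politeness delays, robots.txt handling, and URL normalization beyond fragment removal.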

My findings

First of all, the number of things that can go wrong during website scraping is insane. Not because the crawler is flawed, but because people have some really, really weird websites, with so many “interesting” bugs and errors that it’s almost puzzling to me their websites work at all. Of course, as a “natural born geek”, saying such things is easy for me, while for most people a website isn’t even close to what their primary business is built around; it’s more of a “bonus thing” they don’t really care that much about. So I don’t pass judgment here in any way. I can only assume my car mechanic would say similar things if he looked under the hood of my car …

However, getting to look under the hood in such detail over the last months has given me a unique insight into what’s actually out there. Below are some of the issues I’ve found.

- Empty robots.txt files still returning a 200 OK status from the web server

- Empty sitemap files

- Sitemap files served with an HTML Content-Type

- robots.txt files referencing the landing page as their sitemap, which obviously returns HTML once retrieved, regardless of how you massage your Accept header

- Entire websites without as much as a single Hx element or paragraph, entirely built using DIV elements – Seriously!

- … and a lot of websites aren’t really websites at all, but SPAs (single-page applications)
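Several of the issues listed above can be detected mechanically. The sketch below shows one way to flag the robots.txt and sitemap problems; it is an illustration under stated assumptions, not our production code. `Response` is a hypothetical stand-in for whatever HTTP client you use, and the function names and issue strings are made up for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Response:
    """Hypothetical minimal stand-in for an HTTP response."""
    status: int
    content_type: str
    body: str


def check_robots_txt(resp: Response) -> List[str]:
    """Flag a robots.txt that returns 200 OK but has no content."""
    if resp.status == 200 and not resp.body.strip():
        return ["robots.txt is empty but returned 200"]
    return []


def sitemap_urls_from_robots(body: str) -> List[str]:
    """Extract "Sitemap:" entries; the field name is matched case-insensitively."""
    urls = []
    for line in body.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "sitemap" and value.strip():
            urls.append(value.strip())
    return urls


def check_sitemap(url: str, resp: Response, landing_page: str) -> List[str]:
    """Flag common sitemap problems: landing page as sitemap, HTML, empty body."""
    issues = []
    if url.rstrip("/") == landing_page.rstrip("/"):
        issues.append("sitemap points at the landing page")
    mime = resp.content_type.split(";")[0].strip().lower()
    if mime == "text/html":
        issues.append("sitemap served as text/html")
    body = resp.body.lstrip().lower()
    if not body:
        issues.append("sitemap is empty")
    elif body.startswith(("<!doctype html", "<html")):
        issues.append("sitemap body looks like HTML")
    return issues
```

A robots.txt containing `Sitemap: https://example.com/` would make `check_sitemap` report both “points at the landing page” and the HTML-related issues once the URL is fetched, which matches the failure mode described in the list.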

A week ago I wanted to write a blog post about our RTL feature, and I figured “Hey, let’s find some Quran website, and create a ChatGPT website chatbot wrapping the Quran”. I checked out dozens of websites, and I literally could not find a single Quran-related website that contained as much as a single Hx or paragraph element! 😜

I had to give up; I couldn’t find a single Quran website I could intelligently crawl with our technology. Which implies you could probably build a company doing nothing but creating Quran websites, and never run out of clients wanting to improve their sites’ SEO and structure!
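Checking whether a page has any semantic structure is straightforward with Python’s standard `html.parser`. The sketch below counts H1 to H6 and paragraph elements versus DIVs, and adds a rough SPA heuristic for the last item in the list above: almost no visible text in the served HTML, but at least one script. The function name and the 200-character threshold are arbitrary assumptions for illustration, not values from our crawler.

```python
from html.parser import HTMLParser
from typing import Dict

SEMANTIC_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6", "p"}


class StructureCounter(HTMLParser):
    """Counts start tags and visible text characters (ignoring script/style)."""

    def __init__(self) -> None:
        super().__init__()
        self.counts: Dict[str, int] = {}
        self.text_chars = 0
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        self.counts[tag] = self.counts.get(tag, 0) + 1
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.text_chars += len(data.strip())


def analyse_page(html: str, spa_text_threshold: int = 200) -> dict:
    """Summarise a page's structure; the threshold is an arbitrary assumption."""
    counter = StructureCounter()
    counter.feed(html)
    counter.close()
    semantic = sum(counter.counts.get(tag, 0) for tag in SEMANTIC_TAGS)
    divs = counter.counts.get("div", 0)
    return {
        "semantic_elements": semantic,
        "divs": divs,
        "text_chars": counter.text_chars,
        "div_only": semantic == 0 and divs > 0,
        "maybe_spa": counter.text_chars < spa_text_threshold
        and counter.counts.get("script", 0) > 0,
    }
```

A page like the Quran sites described above would come back with `div_only` set to `True`, which is a strong hint that a crawler relying on headings and paragraphs will extract little of value from it.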

I could go on for hours listing weird findings, but you get the point. What I’m getting at is that crawling and scraping websites is not for the faint of heart; it’s pure rocket science. If you create a crawler, try it on your own website, and it works because your site was built by somebody who knows web standards: congratulations, you’ve created a website crawler that can handle at most 10% of the websites out there, would be my guess. I’d estimate the other 90% suffer from one or more severe bugs that make them almost impossible to crawl. At least the websites I have seen these last months do …

My point

I used to laugh at web design companies selling web design as a service. My assumption was that creating websites was a “solved problem”, and that you couldn’t find many customers willing to pay for such services, since everybody already has a working website. After having spent months crawling websites, I am not so sure anymore. If you want a kick ass business idea, build a website validator such as SEOBility and couple it with web design services. Use the validator to generate leads: automatically produce a report detailing what’s wrong with each prospect’s website, share it with them, and use its findings as sales arguments to win clients. Once website owners can actually see how many things are wrong with their websites, I assume they would be much more willing to pay for redesigning and rebuilding them.

In fact, it could be argued that we are perfectly positioned for such a thing. We built a kick ass website crawler to generate context data for our ChatGPT website chatbot technology, and ended up with insights into website quality that would be difficult for others to reproduce.

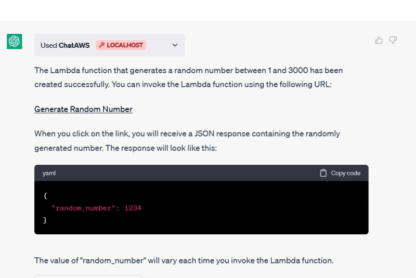

And here’s the point. Try out our product and create a ChatGPT website chatbot for yourself in 5 minutes. Why? Because the quality of your chatbot will reveal a lot about your website’s quality. If it fails because your website is riddled with bugs, you know your site is crap, and you need to create a new one.

Then realise that we’re in the process of “white labeling” the above “Create a ChatGPT website chatbot” form, allowing web design companies to embed it on their own websites as a service to clients and leads. This gives them valuable insights into the quality of their clients’ and leads’ websites, and arguments they can use to win more customers for their primary business, which is creating websites. On top of that, they get an additional revenue stream through a revenue share on the chatbot product itself. And since these are subscription-based services, the hosting of the chatbot provides monthly recurring revenue.

Now all I’ve got to do is figure out how to generate an automatic report with a detailed description of what’s wrong with the website, and send it to the partner’s lead generation email address, providing arguments for creating a new one. Ka-ching! Laughing all the way to the bank …
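As a starting point, the report itself could be as simple as grouping crawl findings by category and wrapping them in an email. The sketch below uses Python’s standard `email.message.EmailMessage` and only builds the message; sending it (for example via `smtplib`) is left out. The function name, the category names, and the default sender address are hypothetical placeholders.

```python
from email.message import EmailMessage
from typing import Dict, List


def build_report_email(
    site_url: str,
    findings: Dict[str, List[str]],  # e.g. {"Sitemap": [...], "Structure": [...]}
    partner_address: str,            # the partner's lead generation address
    sender: str = "reports@example.com",  # hypothetical placeholder
) -> EmailMessage:
    """Turn crawl findings into a plain-text report email (not sent here)."""
    total = sum(len(issues) for issues in findings.values())
    lines = [f"Website quality report for {site_url}", f"Issues found: {total}", ""]
    for category, issues in findings.items():
        if not issues:  # leave out categories without problems
            continue
        lines.append(f"{category}:")
        lines.extend(f"  - {issue}" for issue in issues)
        lines.append("")
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = partner_address
    msg["Subject"] = f"Website quality report: {site_url} ({total} issue(s))"
    msg.set_content("\n".join(lines))
    return msg
```

The findings dictionary could be filled from checks like the robots.txt, sitemap, and structure detection discussed earlier; turning those raw findings into persuasive sales arguments is the part that still needs a human touch.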

… give me another week … 😁