Do you use Apache Kafka or RabbitMQ in your software project? If so, you definitely have some message schemas. Have you ever run into a backward compatibility issue, where an accidental change to a message format stops your entire system from functioning? I bet you have had such an unpleasant experience at least once in your career.

Thankfully, there is a solution. In this article, I cover:

- Why backward compatibility is crucial in message-driven systems.

- How you can validate backward compatibility automatically with simple unit tests in Java.

- A few words about forward compatibility.

You can find code examples and the entire project setup of unit testing backward compatibility in this repository.

Why does backward compatibility matter?

Suppose there are two services. The Order-Service transfers a message about shipment details to the Shipment-Service. Look at the schema below.

The Shipment-Service (the consumer) defines the message schema (i.e. the contract) with two fields: orderId and address. And the Order-Service (the producer) sends the message according to the specified schema.

Everything works well. But then a new requirement comes in: instead of just the city name, we have to send a complex address (country, city, postcode, etc.). No problem, we just need to update the contract accordingly. Look at the updated schema below.

{

"orderId": Long,

"address": {

"country": String,

"city": String,

"postcode": Integer

}

}

Now there are two likely outcomes:

- The producer had updated the contract before the consumer did.

- Vice versa.

Suppose the producer is the first one to make changes. What happens in that situation? Look at the diagram below to understand the consequences.

Here is what happens:

- The Order-Service updates the contract.

- The Order-Service sends the message with the new format.

- The Shipment-Service tries to deserialize the received message with the old contract.

- The deserialization process fails.

But what if the Shipment-Service updates the contract first? Let’s explore this scenario in the diagram below.

The problem remains the same: the Shipment-Service, now expecting the new format, cannot deserialize messages that still arrive in the old one.

So, we can conclude that any change to the message schema has to be backward compatible.
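
Both failure scenarios can be reproduced without any broker. The following is a minimal, dependency-free sketch in which plain Map objects stand in for parsed JSON. The class ContractMismatchSketch and its parseV1 and parseV2 methods are hypothetical names used only for illustration; they are not taken from the article’s repository, and a real consumer would fail inside the JSON library rather than in hand-written checks.

```java
import java.util.Map;

class ContractMismatchSketch {

    // Old contract: the address is a plain string.
    public record OrderCreatedV1(long orderId, String address) {}

    // New contract: the address is an object with separate parts.
    public record OrderCreatedV2(long orderId, Map<?, ?> address) {}

    // A consumer that still uses the old contract.
    public static OrderCreatedV1 parseV1(Map<String, Object> message) {
        if (!(message.get("orderId") instanceof Number orderId)) {
            throw new IllegalArgumentException("orderId must be a number");
        }
        if (!(message.get("address") instanceof String address)) {
            throw new IllegalArgumentException("address must be a string");
        }
        return new OrderCreatedV1(orderId.longValue(), address);
    }

    // A consumer that already uses the new contract.
    public static OrderCreatedV2 parseV2(Map<String, Object> message) {
        if (!(message.get("orderId") instanceof Number orderId)) {
            throw new IllegalArgumentException("orderId must be a number");
        }
        if (!(message.get("address") instanceof Map<?, ?> address)) {
            throw new IllegalArgumentException("address must be an object");
        }
        return new OrderCreatedV2(orderId.longValue(), address);
    }

    public static void main(String[] args) {
        Map<String, Object> oldFormat = Map.of("orderId", 123L, "address", "NYC");
        Map<String, Object> newFormat = Map.of(
                "orderId", 123L,
                "address", Map.of("country", "USA", "city", "NYC", "postcode", 10001));

        // Producer updated first: the old consumer receives the new format.
        try {
            parseV1(newFormat);
        } catch (IllegalArgumentException e) {
            System.out.println("Old consumer, new message: " + e.getMessage());
        }

        // Consumer updated first: the new consumer receives the old format.
        try {
            parseV2(oldFormat);
        } catch (IllegalArgumentException e) {
            System.out.println("New consumer, old message: " + e.getMessage());
        }
    }
}
```

Whichever side deploys first, one of the two combinations is rejected, which is why the change itself has to be compatible.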

Some of you may ask whether it’s possible to update the Shipment-Service and the Order-Service contracts simultaneously. That would eliminate the backward compatibility problem, right? Well, performing such an update is impractical in real life. Besides, even if you did, the broker (e.g. Apache Kafka) may still contain messages in the old format that you need to process somehow. So, backward compatibility is always a concern.

The idea of unit testing backward compatibility

Let’s get to the code. I’m using the Jackson library for serialization and deserialization in Java. But the idea remains the same for any other library.

Look at the first version of the OrderCreated message schema below.

@Data

@Builder

@Jacksonized

public class OrderCreated {

private final Long orderId;

private final String address;

public OrderCreated(Long orderId, String address) {

this.orderId = notNull(orderId, "Order id cannot be null");

this.address = notNull(address, "Address cannot be null");

}

}

The Jacksonized annotation tells Jackson to use the builder generated by the Builder annotation as the deserialization entry point. It’s quite useful because you don’t have to repeat @JsonCreator and @JsonProperty when dealing with immutable classes.

How do we start with the backward compatibility check? Let’s add a message example to the resources/backward-compatibility directory. Look at the JSON message example and the folder structure below.

{

"orderId": 123,

"address": "NYC"

}

Every schema change should come with a new file in the backward-compatibility directory, and its content should describe the increment made to the schema.

Each new file name should be lexically greater than the previous one, so that the test runs are deterministic. The easiest way is to use an incrementing natural number. But what if you need something more complicated? Then I recommend reading my article about Flyway migrations naming strategy in a big project, where I describe a similar principle.
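
One detail is worth checking when you use plain numbers: a lexical sort compares characters, not numeric values. The sketch below is a small, dependency-free illustration; the class FileNameOrderingSketch and the zero-padded naming produced by paddedName are my own assumptions, not a convention from the article’s repository.

```java
import java.util.List;

class FileNameOrderingSketch {

    // Sorts names the same way a plain String comparison does.
    public static List<String> sortLexically(List<String> names) {
        return names.stream().sorted().toList();
    }

    // Hypothetical naming helper: zero-padding keeps lexical and numeric order aligned.
    public static String paddedName(int version) {
        return String.format("%04d.json", version);
    }

    public static void main(String[] args) {
        // "10.json" sorts before "2.json" because '1' < '2'.
        System.out.println(sortLexically(List.of("1.json", "2.json", "10.json")));
        // prints [1.json, 10.json, 2.json]

        System.out.println(sortLexically(List.of(paddedName(10), paddedName(2), paddedName(1))));
        // prints [0001.json, 0002.json, 0010.json]
    }
}
```

With fewer than ten files the unpadded names sort as expected, so the issue only appears once the tenth increment is added.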

So, there are two rules we have to follow:

- Each schema update should come with a new file in the resources/backward-compatibility directory.

- You should never delete previously added files, so that backward compatibility stays consistent.

Still, there are cases when you want to break backward compatibility deliberately. For example, no one uses a field anymore and you just need to remove it. In that case, you can remove some backward compatibility data files. However, don’t treat it as a regular situation: it’s an exception, not a common scenario.

The automation process

Now we need the test that reads the described files and generates the validation cases. Look at the code example below:

class BackwardCompatibilityTest {

private final ObjectMapper objectMapper = new ObjectMapper();

@ParameterizedTest

@SneakyThrows

void shouldRemainBackwardCompatibility(String filename, String json) {

final var orderCreated = assertDoesNotThrow(

() -> objectMapper.readValue(json, OrderCreated.class),

"Couldn't parse OrderCreated for filename: " + filename

);

final var serializedJson = assertDoesNotThrow(

() -> objectMapper.writeValueAsString(orderCreated),

"Couldn't serialize OrderCreated to JSON from filename: " + filename

);

final var expected = new JSONObject(json);

final var actual = new JSONObject(serializedJson);

JSONAssert.assertEquals(

expected,

actual,

false

);

}

}

I parametrised the backward compatibility test because the number of files will grow (and with it the number of test cases). Here is what happens step by step:

- The test receives the JSON content and the latest combined filename (I describe the algorithm later in the article). We use the latter parameter for a more informative assertion message in case of failure.

- Then we try to parse the supplied JSON into the OrderCreated object. If this step fails, we have definitely broken backward compatibility.

- Afterwards, we serialize the parsed OrderCreated object back to JSON. Usually, this operation doesn’t fail. Still, we should be prepared for that scenario.

- Finally, we check that the supplied JSON equals the one we got in step 3 (I use the JSONAssert library here). The false boolean parameter tells JSONAssert to check only the overlapping fields. For example, if the actual result contains the addressV2 field and the expected object doesn’t, it won’t trigger a failure. That’s normal because the backward compatibility data is static while OrderCreated might grow with new fields.
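
The idea behind the non-strict comparison in the last step can be sketched with plain maps. This is only an approximation of what a lenient check does for objects: it ignores arrays and other details that JSONAssert handles, and the class LenientComparisonSketch with its isSubset method is a hypothetical name for illustration.

```java
import java.util.Map;
import java.util.Objects;

class LenientComparisonSketch {

    // Returns true when every field of "expected" is present in "actual" with an
    // equal value. Extra fields in "actual" are ignored; nested objects are
    // compared with the same rule.
    public static boolean isSubset(Map<?, ?> expected, Map<?, ?> actual) {
        for (Map.Entry<?, ?> entry : expected.entrySet()) {
            Object key = entry.getKey();
            if (!actual.containsKey(key)) {
                return false;
            }
            Object expectedValue = entry.getValue();
            Object actualValue = actual.get(key);
            if (expectedValue instanceof Map<?, ?> expectedMap
                    && actualValue instanceof Map<?, ?> actualMap) {
                if (!isSubset(expectedMap, actualMap)) {
                    return false;
                }
            } else if (!Objects.equals(expectedValue, actualValue)) {
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        Map<String, Object> expected = Map.of("orderId", 123, "address", "NYC");
        Map<String, Object> actual = Map.of(
                "orderId", 123,
                "address", "NYC",
                "addressV2", Map.of("city", "NYC"));

        System.out.println(isSubset(expected, actual)); // prints true
        System.out.println(isSubset(actual, expected)); // prints false
    }
}
```

The asymmetry is the point: the stored example must survive the round trip, while the class is free to emit fields the example never mentioned.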

It’s time to provide the input parameters to the shouldRemainBackwardCompatibility test. Look at the code example below.

private static Stream<Arguments> provideMessagesExamples() {

final var resourceFolder = Thread.currentThread().getContextClassLoader().getResources("backward-compatibility").nextElement();

final var fileInfos = Files.walk(Path.of(resourceFolder.toURI()))

.filter(path -> path.toFile().isFile())

.sorted(comparing(path -> path.getFileName().toString()))

.map(file -> new FileInfo(

file.getFileName().toString(),

Files.readString(file))

)

.toList();

...

}

private record FileInfo(String name, String content) {}

First, we read all the files from the backward-compatibility directory, sort them by name, and assign the resulting list of tuples [(filename, content), …] to the fileInfos variable. Look at the next step below.

final var argumentsList = new ArrayList<Arguments>();

for (int i = 0; i < fileInfos.size(); i++) {

JSONObject initialJson = null;

for (int j = 0; j <= i; j++) {

if (j == 0) {

initialJson = new JSONObject(fileInfos.get(0).content);

}

final var curr = fileInfos.get(j);

deepMerge(new JSONObject(curr.content), initialJson);

if (j == i) {

argumentsList.add(Arguments.arguments(curr.name, initialJson.toString()));

}

}

}

return argumentsList.stream();

And here comes the algorithm that builds the backward compatibility data list. Suppose we have 3 files: 1.json, 2.json and 3.json. You should validate these combinations to ensure that newly added changes haven’t broken backward compatibility:

- The content of 1.json.

- The content of 1.json + 2.json (the latter overwrites existing fields).

- The content of 1.json + 2.json + 3.json (the latter overwrites existing fields).

The loop above does this by calling the deepMerge method. The first argument is the file at the current index of the inner loop, and initialJson is the target that accumulates all subsequent changes.

Look at the deepMerge method declaration below.

private static void deepMerge(JSONObject source, JSONObject target) {

final var names = requireNonNullElse(source.names(), new JSONArray());

for (int i = 0; i < names.length(); i++) {

final String key = (String) names.get(i);

Object value = source.get(key);

if (!target.has(key)) {

// new value for "key":

target.put(key, value);

} else {

// existing value for "key" - recursively deep merge:

if (value instanceof JSONObject valueJson) {

deepMerge(valueJson, target.getJSONObject(key));

} else {

target.put(key, value);

}

}

}

}

I didn’t write this code example myself; I took it from this StackOverflow question.

There are two arguments: source and target. The source is the object to read new values from, and the target is the JSONObject where they are put or where they replace existing ones.
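
To make the merge order concrete, here is a dependency-free sketch of the same idea that uses plain Map objects instead of JSONObject. The class CumulativeMergeSketch and its cumulativeSnapshots method are hypothetical names for illustration, not the article’s code. Unlike the version above, this variant copies nested objects so that each snapshot stays independent of later merges.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class CumulativeMergeSketch {

    // Copies every field of "source" into "target". Nested objects are merged
    // field by field; any other value overwrites what the target had.
    @SuppressWarnings("unchecked")
    public static void deepMerge(Map<String, Object> source, Map<String, Object> target) {
        for (Map.Entry<String, Object> entry : source.entrySet()) {
            String key = entry.getKey();
            Object value = entry.getValue();
            if (value instanceof Map<?, ?> nestedSource) {
                Map<String, Object> nestedTarget;
                if (target.get(key) instanceof Map<?, ?> existing) {
                    nestedTarget = (Map<String, Object>) existing;
                } else {
                    nestedTarget = new LinkedHashMap<>();
                    target.put(key, nestedTarget);
                }
                deepMerge((Map<String, Object>) nestedSource, nestedTarget);
            } else {
                target.put(key, value);
            }
        }
    }

    // For increments [f1, f2, f3] returns [f1, f1+f2, f1+f2+f3].
    public static List<Map<String, Object>> cumulativeSnapshots(List<Map<String, Object>> increments) {
        List<Map<String, Object>> snapshots = new ArrayList<>();
        Map<String, Object> merged = new LinkedHashMap<>();
        for (Map<String, Object> increment : increments) {
            deepMerge(increment, merged);
            // Merging into an empty map produces an independent deep copy.
            Map<String, Object> snapshot = new LinkedHashMap<>();
            deepMerge(merged, snapshot);
            snapshots.add(snapshot);
        }
        return snapshots;
    }

    public static void main(String[] args) {
        Map<String, Object> first = Map.of("orderId", 123, "address", "NYC");
        Map<String, Object> second = Map.of("newAddress", Map.of("city", "NYC", "country", "USA"));
        Map<String, Object> third = Map.of("newAddress", Map.of("postcode", 123456));

        cumulativeSnapshots(List.of(first, second, third)).forEach(System.out::println);
    }
}
```

Each snapshot corresponds to one generated test case, so adding a fourth file adds exactly one more case without changing the earlier ones.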

Finally, to supply the computed data set just add the @MethodSource annotation to the shouldRemainBackwardCompatibility test method. Look at the code example below.

@ParameterizedTest

@MethodSource("provideMessagesExamples")

void shouldRemainBackwardCompatibility(String filename, String json) { ... }

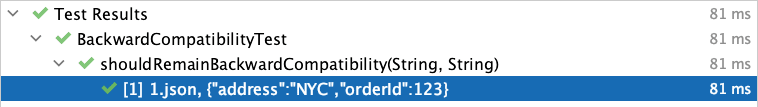

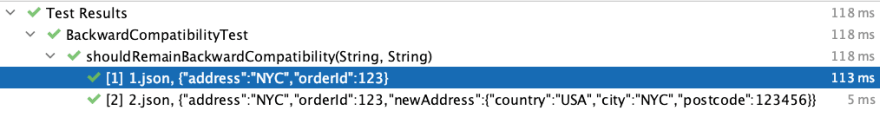

Here is the test run result.

As you can see, an example with a single file works as expected.

Evolving data schema

Let’s get back to the starting point of the article. There is an address field of String type, and we want to store city, country, and postcode separately. As we already discussed, we cannot just replace the existing field with a new value type. Therefore, we should add a new field and mark the previous one as deprecated. Look at the updated version of the OrderCreated class below:

@Data

@Builder

@Jacksonized

public class OrderCreated {

private final Long orderId;

@Deprecated(forRemoval = true)

private final String address;

private final Address newAddress;

public OrderCreated(Long orderId, String address, Address newAddress) {

this.orderId = notNull(orderId, "Order id cannot be null");

this.address = notNull(address, "Address cannot be null");

this.newAddress = notNull(newAddress, "Complex address cannot be null");

}

@Builder

@Data

@Jacksonized

public static class Address {

private final String city;

private final String country;

private final Integer postcode;

public Address(String city, String country, Integer postcode) {

this.city = notNull(city, "City cannot be null");

this.country = notNull(country, "Country cannot be null");

this.postcode = notNull(postcode, "Postcode cannot be null");

}

}

}

I deprecated the address field and added the newAddress field that is a complex object containing country, city, and postcode. All right, now we need to add the new 2.json file with the example of filled newAddress. Look at the code snippet below.

{

"newAddress": {

"city": "NYC",

"country": "USA",

"postcode": 123456

}

}

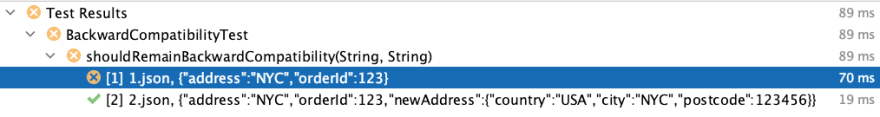

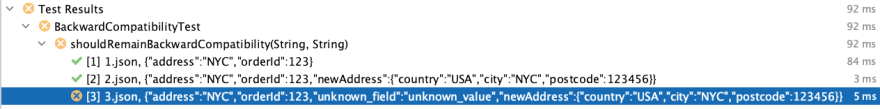

Let’s run the test to check the backward compatibility.

We did break the backward compatibility! Why is that? Because we marked the newAddress field as mandatory and checked it for non-nullability. No surprise that the 1.json content failed the test because it has no mention of the newAddress field.

So, here comes an important conclusion. If you add a new field to an existing schema, you cannot require it to be non-null. There is no guarantee that the producer will start sending messages with the new field right away. The solution is to use default values. Look at the fixed OrderCreated class declaration below.

@Data

@Builder

@Jacksonized

public class OrderCreated {

private final Long orderId;

@Deprecated(forRemoval = true)

private final String address;

private final Address newAddress;

public OrderCreated(Long orderId, String address, Address newAddress) {

this.orderId = notNull(orderId, "Order id cannot be null");

this.address = notNull(address, "Address cannot be null");

this.newAddress = requireNonNullElse(newAddress, Address.builder().build());

}

@Builder

@Data

@Jacksonized

public static class Address {

@Builder.Default

private final String city = "";

@Builder.Default

private final String country = "";

@Builder.Default

private final Integer postcode = null;

}

}

We don’t want to deal with null values. That’s why we assign an Address.builder().build() instance if the supplied newAddress is null (which basically means it’s not present in the provided JSON).

The @Builder.Default Lombok annotation plays a similar role to the requireNonNullElse function, but without writing the constructor manually. Keep in mind that the default applies only when the field is absent; an explicit null in the JSON is still passed through as null.
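
The same rule (old fields stay mandatory, new fields fall back to a default) can also be expressed without Lombok. The following is a minimal sketch with Java records; the class DefaultValueSketch and the Address.empty() factory are hypothetical names for illustration, and Jackson is left out so the snippet runs on a plain JDK.

```java
import java.util.Objects;

class DefaultValueSketch {

    public record Address(String city, String country, Integer postcode) {

        // Default used when the producer has not started sending the new field yet.
        public static Address empty() {
            return new Address("", "", null);
        }
    }

    public record OrderCreated(Long orderId, String address, Address newAddress) {

        public OrderCreated {
            // Fields that existed from the first version stay mandatory.
            Objects.requireNonNull(orderId, "Order id cannot be null");
            Objects.requireNonNull(address, "Address cannot be null");
            // The newly added field must not be mandatory: fall back to a default.
            newAddress = Objects.requireNonNullElse(newAddress, Address.empty());
        }
    }

    public static void main(String[] args) {
        // Simulates a message in the old format, where newAddress is absent.
        OrderCreated fromOldProducer = new OrderCreated(123L, "NYC", null);
        System.out.println(fromOldProducer.newAddress());
    }
}
```

A message from an old producer is then accepted, and the consumer never has to check newAddress for null.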

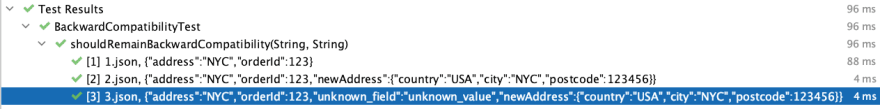

Let’s run the backward compatibility test again.

Now we’re ready. The new field hasn’t broken backward compatibility, and we can update the contracts safely on both sides in any order.

A few words about forward compatibility

I’ve seen that developers tend to care about forward compatibility much less than about backward compatibility, though it’s crucial as well.

I’ve covered the topic of forward compatible enums in this article. If you’re dealing with message-driven systems, I strongly recommend checking it out.

What if somebody puts additional properties that aren’t present in the message schema? Look at the example below:

{

"orderId": 123,

"address": "NYC",

"newAddress": {

"city": "NYC",

"country": "USA",

"postcode": 123456

},

"unknown_field": "unknown_value"

}

Nothing should break, right? After all, there is no field to map the value to. However, let’s add a backward compatibility test to make sure. Look at the new 3.json file declaration below.

{

"unknown_field": "unknown_value"

}

Let’s run the test to see the results.

Wow, something went wrong. Look at the output message below.

Caused by: UnrecognizedPropertyException: Unrecognized field "unknown_field"

By default, Jackson treats the presence of an unknown property as an error. Sometimes that can be really annoying, especially if there are many producers (and some may not be part of your project). Thankfully, the fix is a piece of cake. You just need to add the JsonIgnoreProperties annotation with the proper value. Look at the code snippet below.

@Data

@Builder

@Jacksonized

@JsonIgnoreProperties(ignoreUnknown = true)

public class OrderCreated { ... }

Let’s run the test again.

It still fails! Though the message is different.

Expected: unknown_field

but none found

The assertion checks that the input and the serialized JSON values are equal. But in this situation, that’s not the case. The input JSON contains the unknown_field, but with @JsonIgnoreProperties(ignoreUnknown = true) Jackson simply drops it. So, we lost the value.

Again, this might be acceptable under particular circumstances. But what if you still want to guarantee that you won’t lose user-provided fields, even if there is no business logic for these values? For example, your service might act as a middleware that consumes messages from one Kafka topic and produces them to another one.

Jackson has a solution for this case as well. The JsonAnySetter and JsonAnyGetter annotations help to store unknown values and serialize them later. Look at the final version of the OrderCreated class declaration below.

@Data

@Builder

@Jacksonized

@JsonIgnoreProperties(ignoreUnknown = true)

public class OrderCreated {

private final Long orderId;

@Deprecated(forRemoval = true)

private final String address;

private final Address newAddress;

@JsonAnySetter

@Singular("any")

private final Map<String, Object> additionalProperties;

@JsonAnyGetter

Map<String, Object> getAdditionalProperties() {

return additionalProperties;

}

public OrderCreated(Long orderId, String address, Address newAddress, Map<String, Object> additionalProperties) {

this.orderId = notNull(orderId, "Order id cannot be null");

this.address = notNull(address, "Address cannot be null");

this.newAddress = requireNonNullElse(newAddress, Address.builder().build());

this.additionalProperties = requireNonNullElse(additionalProperties, emptyMap());

}

@Builder

@Data

@Jacksonized

public static class Address {

@Builder.Default

private final String city = "";

@Builder.Default

private final String country = "";

@Builder.Default

private final Integer postcode = null;

}

}

I marked the getAdditionalProperties method as package-private so the users of this class cannot access the unknown property values.

Let’s run the backward compatibility test again.

Everything works like a charm now. The moral is that forward compatibility might be as important as backward compatibility. And if you want to maintain it, make sure that you don’t simply erase unknown values but pass them along so that they are serialized in the expected JSON.
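
The pass-through idea does not depend on Jackson. Here is a minimal sketch with plain maps, assuming the three known fields of OrderCreated from this article; the class PassThroughSketch and the Parsed record are hypothetical names for illustration only.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.Set;

class PassThroughSketch {

    // Fields the consumer knows about (the ones declared in OrderCreated).
    private static final Set<String> KNOWN_FIELDS = Set.of("orderId", "address", "newAddress");

    // Known fields plus everything else that arrived with the message.
    public record Parsed(Map<String, Object> known, Map<String, Object> additionalProperties) {

        // Builds the outgoing message and re-attaches the unknown fields.
        public Map<String, Object> toMessage() {
            Map<String, Object> message = new LinkedHashMap<>(known);
            message.putAll(additionalProperties);
            return message;
        }
    }

    // Splits the incoming message instead of dropping what is not recognized.
    public static Parsed parse(Map<String, Object> message) {
        Map<String, Object> known = new LinkedHashMap<>();
        Map<String, Object> unknown = new LinkedHashMap<>();
        message.forEach((key, value) ->
                (KNOWN_FIELDS.contains(key) ? known : unknown).put(key, value));
        return new Parsed(known, unknown);
    }

    public static void main(String[] args) {
        Map<String, Object> incoming = Map.of(
                "orderId", 123,
                "address", "NYC",
                "unknown_field", "unknown_value");

        Parsed parsed = parse(incoming);
        System.out.println(parsed.additionalProperties());
        System.out.println(parsed.toMessage().equals(incoming)); // prints true
    }
}
```

A middleware built this way forwards fields it has never heard of, so producers and downstream consumers can evolve without waiting for it.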

Conclusion

That’s all I wanted to tell you about backward (and a bit of forward) compatibility. I hope this helps you keep your contracts consistent and avoid unexpected deserialization errors on the consumer side.

If you have questions or suggestions, leave your comments down below. Thanks for reading!

Resources

- The repository with the whole setup of unit testing backward compatibility

- Apache Kafka

- RabbitMQ

- Java Jackson library

- Jacksonized Lombok annotation

- Builder Lombok annotation

- JsonCreator and JsonProperty Jackson annotations

- My article about Flyway migrations naming strategy in a big project

- JSONAssert library

- StackOverflow question with example of merging two JSON objects

- My article about forward compatible enum values in API with Java Jackson

- JsonIgnoreProperties Jackson annotation

- JsonAnySetter and JsonAnyGetter Jackson annotations