Microservice architecture is famous for its resilience and ability to self-recover. The health check pattern (e.g. Kubernetes readiness, liveness, and startup probes) is one of the patterns that actually makes this happen. How to set up health check probes is well covered, but there is not much written about what to check in a probe and how to decide whether a dependency is critical for a particular service.

Topics to cover

- How a health check pattern works.

- What to check when a service is under load.

- What to check at initial startup.

How a health check pattern works

Before explaining the health check pattern, we first need to understand how load balancing works. Every microservice usually runs multiple instances (or replicas) to provide redundancy and reduce the load on any single instance. So whenever an instance of microservice A wants to make an API call to microservice B, it needs to choose a particular instance of microservice B. But how does it choose one? The Load Balancer (LB) helps make this decision.

Load balancers keep track of all the healthy instances of a microservice and spread the load between them (see load balancing strategies). Because service instances in a microservices architecture are usually ephemeral, the list of healthy instances is constantly updated, for example during a rollout, when new instances get added and old ones get removed. The problem is that by the time an instance is added to the LB's list, it may not be ready to handle requests yet (for example, its cache is not warmed up or its database connections are not yet established). This is where the health check pattern helps the LB understand whether a service instance is ready to handle traffic, or still capable of handling it.

How does the load balancer do this? It periodically sends a request to the health check endpoint of each instance, and if an instance reports that it is ready a pre-configured number of consecutive times (to avoid flapping on transient results), it starts receiving traffic. That, in a nutshell, is the health check pattern.
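To make the mechanism concrete, here is a minimal Python sketch of the load balancer side, assuming a simple round-robin strategy. The class names and threshold values are hypothetical; the two thresholds loosely mirror the `successThreshold` and `failureThreshold` fields of a Kubernetes probe. A new instance stays out of rotation until it passes enough consecutive checks, and is taken out again after enough consecutive failures.

```python
import itertools
from dataclasses import dataclass


@dataclass
class InstanceHealth:
    """Hypothetical per-instance state kept by the load balancer."""
    name: str
    success_threshold: int = 3  # consecutive OKs before the instance gets traffic
    failure_threshold: int = 3  # consecutive failures before it loses traffic
    in_rotation: bool = False   # new instances start out of rotation
    successes: int = 0
    failures: int = 0

    def record(self, probe_ok: bool) -> None:
        """Feed one health check result and update the rotation state."""
        if probe_ok:
            self.successes += 1
            self.failures = 0
            if self.successes >= self.success_threshold:
                self.in_rotation = True
        else:
            self.failures += 1
            self.successes = 0
            if self.failures >= self.failure_threshold:
                self.in_rotation = False


class RoundRobinBalancer:
    """Cycles through the instances that are currently in rotation."""

    def __init__(self, instances):
        self.instances = instances
        self._counter = itertools.count()

    def pick(self) -> InstanceHealth:
        healthy = [i for i in self.instances if i.in_rotation]
        if not healthy:
            raise RuntimeError("no healthy instances")
        return healthy[next(self._counter) % len(healthy)]


b1, b2 = InstanceHealth("b-1"), InstanceHealth("b-2")
for _ in range(3):
    b1.record(True)    # b-1 passes three checks in a row
b2.record(True)        # b-2 has passed only one check so far
lb = RoundRobinBalancer([b1, b2])
print(lb.pick().name)  # b-1
```

Requiring several consecutive results in either direction is what keeps a single slow or lucky response from flipping an instance in and out of rotation.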

But what should a microservice check in these health check endpoints? It depends on which of two scenarios applies:

- The service is serving production traffic.

- The service is at initial startup.

The usual advice is to check all critical dependencies. But what are these critical dependencies? A service that serves synchronous requests usually depends on some combination of the following:

- Persistent database;

- Cache database;

- Message broker;

- Other services;

- Static storage.

But should you actually check all of them in a health check endpoint? Assume we have a service with a persistent database (which handles writes, 5% of requests) and a cache database (which serves reads, 95% of requests). What are the pros and cons of checking both dependencies versus just the cache? Let’s explore three scenarios:

Scenario 1. Instance #3 stops receiving production traffic if it has no access to the database, the cache, or both.

Whenever an instance of the service fails to reach any of its dependencies, its health check fails, so the LB stops sending traffic to that instance. Seems like a safe option, doesn’t it? Let’s look at the other scenarios:
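On the service side, the readiness endpoint for Scenario 1 could aggregate its dependency checks roughly as below. This is a sketch: the check callables are placeholders (in a real service they might run a trivial query against the database or ping the cache with a short timeout), and returning 503 for "not ready" is a common convention rather than a requirement. Kubernetes HTTP probes treat any status from 200 to 399 as success.

```python
from typing import Callable, Dict, Tuple

# A check returns True when the dependency is reachable; raising counts as a failure.
Check = Callable[[], bool]


def readiness(checks: Dict[str, Check]) -> Tuple[int, Dict[str, str]]:
    """Run every configured dependency check.

    Returns an HTTP-style status (200 if all pass, 503 otherwise) and a
    per-dependency report that helps when debugging a failing probe.
    """
    report = {}
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:  # e.g. a connection error or timeout
            ok = False
        report[name] = "ok" if ok else "fail"
    status = 200 if all(v == "ok" for v in report.values()) else 503
    return status, report


# Scenario 1: both the database and the cache are treated as critical.
db_up, cache_up = False, True
scenario_1 = {"database": lambda: db_up, "cache": lambda: cache_up}
print(readiness(scenario_1))  # (503, {'database': 'fail', 'cache': 'ok'})
```

Which dependencies go into the `checks` mapping is exactly the design decision the following scenarios explore.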

Scenario 2. All instances stop receiving production traffic because they lose access to the database

Here a critical dependency fails, so every instance of our service fails its health check and the LB has no instance left to send traffic to. Now our configuration does not look good: to make sure the 5% of writes only reach instances that can talk to the database when a single instance fails, we sacrifice the 95% of reads whenever the database itself fails.

Let’s say that our SLA for the 95% of reads is more important and much stricter. In that case, we put only the cache in the health check:

Scenario 3. All instances keep receiving traffic, but the 5% of writes fail

When the database fails, our health check still returns OK. This means all instances keep serving the 95% of reads while failing the 5% of write requests, which is much better for the read SLA and worse for writes.
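The trade-off between Scenarios 2 and 3 can be put into numbers with a deliberately simplified model: reads need only the cache, writes need only the database, and if any checked dependency is down, every instance fails its health check, so no traffic is served at all. The function below is hypothetical and only illustrates the 95%/5% arithmetic from the example.

```python
READ_SHARE, WRITE_SHARE = 0.95, 0.05  # traffic split from the example above


def served_fraction(checked: set, down: set) -> float:
    """Fraction of requests that succeed while the dependencies in `down` fail.

    Simplified model: reads use only the cache, writes use only the database,
    and a failing checked dependency takes every instance out of rotation.
    """
    if checked & down:
        return 0.0  # all health checks fail, so the LB has no instance to use
    served = 0.0
    if "cache" not in down:
        served += READ_SHARE
    if "database" not in down:
        served += WRITE_SHARE
    return served


db_outage = {"database"}
print(served_fraction({"database", "cache"}, db_outage))  # Scenario 2: 0.0
print(served_fraction({"cache"}, db_outage))              # Scenario 3: 0.95
```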

When the cache fails, though, we are still in pretty good shape. From the SLA or client’s perspective, it probably doesn’t matter whether a read request fails because no service instance is available or because the service responds with a “cache is unavailable” error. Of course, there are two edge cases:

- First, in theory, we could have the service read from the database instead, but then the reason for having the cache at all comes into question.

- The second is less obvious: if you allow writes while the cache is unavailable, the cache becomes inconsistent with the database, and at that point it is useless.
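A toy in-memory store can illustrate both edge cases. It is a hypothetical sketch, not a production cache client: reads may optionally fall back to the database (the first edge case), and writes are refused while the cache is down, which is one possible way to avoid the second edge case, since otherwise the database would change while the cache keeps an outdated value.

```python
class CacheUnavailable(Exception):
    """Raised when an operation needs the cache and it is down."""


class Store:
    """Toy write-through store with a database and a cache (both dicts)."""

    def __init__(self):
        self.db = {}
        self.cache = {}
        self.cache_up = True

    def read(self, key, fallback_to_db=False):
        if self.cache_up:
            return self.cache.get(key)
        if fallback_to_db:
            # Edge case 1: this works, but if the database can absorb all
            # reads on its own, the cache's reason to exist is questionable.
            return self.db.get(key)
        raise CacheUnavailable("cache is unavailable")

    def write(self, key, value):
        # Edge case 2: refuse writes while the cache is down; otherwise the
        # database would change and the cache would later serve a stale value.
        if not self.cache_up:
            raise CacheUnavailable("cache is unavailable")
        self.db[key] = value
        self.cache[key] = value


store = Store()
store.write("user:1", "Alice")
store.cache_up = False
print(store.read("user:1", fallback_to_db=True))  # Alice
```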

As you can see, choosing which critical dependencies to check in a health check depends entirely on your SLA and traffic distribution. Hopefully the example above gives you an idea of how to make that choice.

The initial startup of a service is a slightly different situation from the one above. The main difference is that this check only needs to pass once. So the goal of a startup check is to ensure that the service has the right configuration for all its critical dependencies and, before we start sending it production traffic, that it is actually capable of handling it. By the latter, I mean proactively establishing all required connections and doing a warm-up (for example, pre-loading the cache).

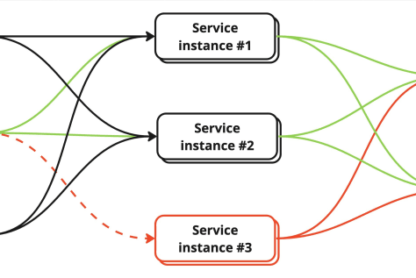
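A startup routine along these lines is one way to implement such a check. The callables `connect_db`, `connect_cache` and `warm_cache` are hypothetical stand-ins for whatever your service needs, and each is expected to raise on failure. The startup probe reports 503 until all steps have succeeded once; if they never do, Kubernetes kills the container once the startup probe's failure threshold is reached and applies its restart policy.

```python
import threading


class Startup:
    """One-time startup work plus the result a startup probe would report."""

    def __init__(self, connect_db, connect_cache, warm_cache):
        # Hypothetical steps, run in order; each raises on failure.
        self._steps = [connect_db, connect_cache, warm_cache]
        # An Event, because run() would typically execute in a background
        # thread while the probe handler reads the state from another one.
        self._done = threading.Event()
        self.error = None

    def run(self) -> None:
        try:
            for step in self._steps:
                step()  # fail fast on bad config or an unreachable dependency
        except Exception as exc:
            self.error = exc  # the probe keeps failing; the orchestrator takes over
            return
        self._done.set()

    def probe(self) -> int:
        """HTTP-style status for the startup probe endpoint."""
        return 200 if self._done.is_set() else 503


calls = []
startup = Startup(lambda: calls.append("db"),
                  lambda: calls.append("cache"),
                  lambda: calls.append("warm"))
print(startup.probe())         # 503 (nothing has run yet)
startup.run()
print(startup.probe(), calls)  # 200 ['db', 'cache', 'warm']
```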

The one thing that is not recommended, though, is checking other critical services. This can fail your rollout and trigger a chain of calls that is likely to fail somewhere. Imagine every service checking every other service it depends on, and those services checking their own dependencies. A single rollout of one instance could easily trigger hundreds of requests, and a few of them will almost certainly fail. Below you can see a picture of how the probability grows:
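To put rough numbers on this, here is a small sketch. It assumes, hypothetically, that each service checks `fanout` downstream services, recursively to a fixed depth, and that each probe call fails independently with a fixed probability; the parameter values are illustrative, not measurements from a real system. The chance that at least one of n calls fails is 1 - (1 - p)^n.

```python
def calls_per_rollout(fanout: int, depth: int) -> int:
    """Probe requests triggered if every service checks `fanout` downstream
    services and each of those does the same, `depth` levels deep."""
    return sum(fanout ** level for level in range(1, depth + 1))


def p_any_failure(p_fail: float, n_calls: int) -> float:
    """Probability that at least one of n independent calls fails."""
    return 1 - (1 - p_fail) ** n_calls


n = calls_per_rollout(fanout=5, depth=3)  # 5 + 25 + 125
print(n)                                  # 155
print(round(p_any_failure(0.01, n), 2))   # 0.79
```

Even with a 99% success rate per call, a cascade of about 155 checks fails somewhere roughly four times out of five under these assumptions.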

As you can see, there are a few cases to watch out for when choosing which dependencies to check in health checks:

When a service has production traffic:

- The choice of critical dependencies depends on your SLA requirements and traffic distribution.

- Checking other services is usually an anti-pattern, as it causes “chatty traffic”.

At initial startup, however, it’s better to check:

- Whether your service can access all critical dependencies, such as the database and cache.

- But, as before, not other services, because a failure in any of them (or in their dependencies) could fail your rollout.