What are screen sharing APIs?

Screen sharing has become an essential part of many web applications as it enables remote collaboration and support. While most developers are familiar with the standard WebRTC APIs for screen sharing, there are other lesser-known APIs that offer additional functionality and control. In this blog post, we’ll explore four of these screen sharing APIs and provide code snippets to help you get started. Although these APIs are limited to Google Chrome for now, they certainly are extremely fun to play around with!

Region Capture API

The Region Capture API lets a web app crop the video track it captures of its own tab, so that only a chosen part of the page is shared rather than the whole tab. This is useful when you want to share a specific section of a webpage, such as the main content area, while leaving out everything around it. Here’s an example of how to use the Region Capture API:

You define a CropTarget in your web app by calling CropTarget.fromElement() with the element of your choice as input.

// In the main web app, associate mainContentArea with a new CropTarget

const mainContentArea = document.querySelector("#mainContentArea");

const cropTarget = await CropTarget.fromElement(mainContentArea);

You pass the CropTarget to the video conferencing web app.

// Send the CropTarget to the video conferencing web app.

const iframe = document.querySelector("#videoConferenceIframe");

iframe.contentWindow.postMessage(cropTarget);

The video conferencing web app asks the browser to crop the track to the area defined by CropTarget by calling cropTo() on the self-capture video track with the crop target received from the main web app.

// Ask the user for permission

// to start capturing the current tab.

const stream = await navigator.mediaDevices.getDisplayMedia({

preferCurrentTab: true,

});

const [track] = stream.getVideoTracks();

// Start cropping the self-capture video track using the CropTarget

// received over window.onmessage.

await track.cropTo(cropTarget);

// Enjoy! Transmit remotely the cropped video track with RTCPeerConnection.
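Since Region Capture is limited to Chrome for now, it may help to guard the cropTo() call and to check where the CropTarget message came from. The sketch below is one possible way to do that, not a definitive implementation: waitForCropTarget() and cropIfSupported() are hypothetical helper names, and the expected origin is an assumption you would replace with the origin of your main web app.

```javascript
// Hypothetical helper: resolves with the data of the first "message" event
// sent from expectedOrigin. Messages from any other origin are ignored.
function waitForCropTarget(target, expectedOrigin) {
  return new Promise((resolve) => {
    function onMessage(event) {
      if (event.origin !== expectedOrigin) return;
      target.removeEventListener("message", onMessage);
      resolve(event.data);
    }
    target.addEventListener("message", onMessage);
  });
}

// Hypothetical helper: crops the track only when cropTo() is available.
// Resolves to true if the track was cropped, false if it was left uncropped.
async function cropIfSupported(track, cropTarget) {
  if (typeof track.cropTo !== "function") return false;
  await track.cropTo(cropTarget);
  return true;
}
```

Inside the video conferencing iframe, you would pass window as the first argument to waitForCropTarget() and then hand the result to cropIfSupported() together with the self-capture track.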

Keep in mind that Region Capture crops in two dimensions only. A really cool privacy feature coming soon is capture that also takes the third dimension (the z-index) into account:

Occluding and occluded content

For Region Capture, only the position and size of the target matter, not the z-index. Pixels occluding the target will be captured, and occluded parts of the target will not.

This is a corollary of Region Capture being essentially cropping. One alternative, which will be its own future API, is Element-level Capture, that is, capture only pixels associated with the target, regardless of occlusions. Such an API has a different set of security and privacy requirements than simple cropping.

Google Chrome’s Sample

Capture Handle API

The Capture Handle API provides a way for the capturing webpage to communicate with the captured webpage. It makes things like navigating between slides using controls on the video call itself possible rather than continuously having to switch between the video call and the presentation webpage.

Captured side

Web apps can expose information to would-be capturing web apps. They do so by calling navigator.mediaDevices.setCaptureHandleConfig() with an optional object consisting of these members:

- handle: Can be any string up to 1024 characters.
- exposeOrigin: If true, the origin of the captured web app may be exposed to capturing web apps.
- permittedOrigins: Valid values are (i) an empty array, (ii) an array with the single item "*", or (iii) an array of origins. If permittedOrigins consists of the single item "*", then CaptureHandle is observable by all capturing web apps. Otherwise, it is observable only to capturing web apps whose origin is in permittedOrigins.

The following example shows how to expose a randomly generated UUID as a handle and the origin to any capturing web app.

const config = {

handle: crypto.randomUUID(),

exposeOrigin: true,

permittedOrigins: ['*'],

};

navigator.mediaDevices.setCaptureHandleConfig(config);
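If you would rather expose the handle to a short list of origins than to everyone, a small builder can validate the input first. This is only a sketch under stated assumptions: buildCaptureHandleConfig() and applyCaptureHandleConfig() are hypothetical helper names, and the 1024-character check simply mirrors the limit described above.

```javascript
// Hypothetical helper: builds a config object for setCaptureHandleConfig().
// permittedOrigins may be [], ["*"], or an array of origins.
function buildCaptureHandleConfig(handle, permittedOrigins = []) {
  if (typeof handle !== "string" || handle.length > 1024) {
    throw new RangeError("handle must be a string of at most 1024 characters");
  }
  return {
    handle,
    exposeOrigin: true,
    permittedOrigins: [...permittedOrigins],
  };
}

// Hypothetical helper: applies the config only where the API exists.
// Returns true if the config was applied, false otherwise.
function applyCaptureHandleConfig(config) {
  const mediaDevices =
    typeof navigator !== "undefined" ? navigator.mediaDevices : undefined;
  if (!mediaDevices || typeof mediaDevices.setCaptureHandleConfig !== "function") {
    return false;
  }
  mediaDevices.setCaptureHandleConfig(config);
  return true;
}
```

For example, buildCaptureHandleConfig("slides-42", ["https://meet.example"]) would make the handle observable only to a capturing web app served from that (made-up) origin.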

Capturing side

The capturing web app holds a video MediaStreamTrack, and can read the capture handle information by calling getCaptureHandle() on that MediaStreamTrack. This call returns null if no capture handle is available, or if the capturing web app is not permitted to read it. If a capture handle is available, and the origin of the capturing web app is covered by permittedOrigins, this call returns an object with the following members:

- handle: The string value set by the captured web app with navigator.mediaDevices.setCaptureHandleConfig().
- origin: The origin of the captured web app if exposeOrigin was set to true. Otherwise, it is not defined.

The following example shows how to read the capture handle information from a video track.

// Prompt the user to capture their display (screen, window, tab).

const stream = await navigator.mediaDevices.getDisplayMedia();

// Check if the video track is exposing information.

const [videoTrack] = stream.getVideoTracks();

const captureHandle = videoTrack.getCaptureHandle();

if (captureHandle) {

// Use captureHandle.origin and captureHandle.handle...

}

Monitor CaptureHandle changes by listening to "capturehandlechange" events on a MediaStreamTrack object. Changes happen when:

- The captured web app calls navigator.mediaDevices.setCaptureHandleConfig().
- A cross-document navigation occurs in the captured web app.

videoTrack.addEventListener('capturehandlechange', event => {

const captureHandle = event.target.getCaptureHandle();

// Consume new capture handle...

});
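Reading the handle once and then listening for changes can be folded into one small helper. The following is a minimal sketch, assuming a hypothetical helper named watchCaptureHandle(); it reports the current handle right away, reports it again on every "capturehandlechange" event, and returns a function that stops listening.

```javascript
// Hypothetical helper: calls onChange with the current capture handle
// (possibly null) immediately and again whenever the handle changes.
// Returns a function that removes the listener.
function watchCaptureHandle(track, onChange) {
  if (typeof track.getCaptureHandle !== "function") {
    return () => {}; // Capture Handle is unavailable; nothing to watch.
  }
  const notify = () => onChange(track.getCaptureHandle());
  track.addEventListener("capturehandlechange", notify);
  notify();
  return () => track.removeEventListener("capturehandlechange", notify);
}
```

A capturing app could use the callback to show or hide its in-call presentation controls, depending on whether the handle it receives is one it recognizes.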

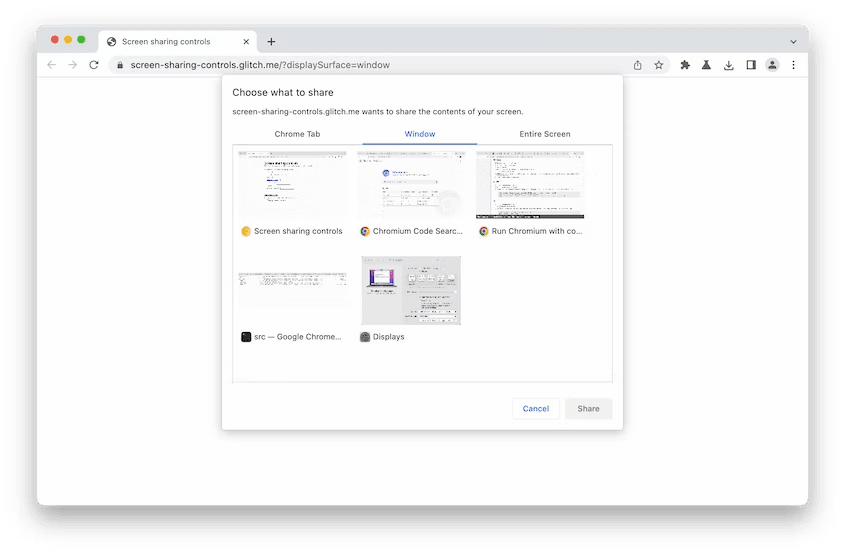

Screen Sharing Controls API

The Screen Sharing Controls API does not replace the browser’s screen sharing UI. Instead, it adds options to getDisplayMedia() that let your web app tell Chrome what its media picker should offer and which controls it should show while sharing. Here’s an example of how to use the Screen Sharing Controls API:

displaySurface

Web apps with specialized user journeys, which work best when the user shares a window or a screen, can ask Chrome to offer windows or screens more prominently in the media picker. The ordering of the offer remains unchanged, but the relevant pane is pre-selected.

The values for the displaySurface option are:

- "browser" for tabs.
- "window" for windows.
- "monitor" for screens.

const stream = await navigator.mediaDevices.getDisplayMedia({

// Pre-select the "Window" pane in the media picker.

video: { displaySurface: "window" },

});

surfaceSwitching

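While a tab is being shared, Chrome can show a button that lets the user switch to sharing a different tab without restarting the capture. If [surfaceSwitching](https://w3c.github.io/mediacapture-screen-share/#dom-displaymediastreamoptions-surfaceswitching) is set to "include", the browser will expose that button. If set to "exclude", it will refrain from showing the user that button.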

const stream = await navigator.mediaDevices.getDisplayMedia({

video: true,

// Ask Chrome to expose browser-level UX elements that allow

// the user to switch the underlying track at any time,

// initiated by the user and without prior action by the web app.

surfaceSwitching: "include"

});

selfBrowserSurface

To protect users from themselves, video conferencing web apps can now set [selfBrowserSurface](https://w3c.github.io/mediacapture-screen-share/#dom-displaymediastreamoptions-selfbrowsersurface) to "exclude". Chrome will then exclude the current tab from the list of tabs offered to the user. To include it, set it to "include".

const stream = await navigator.mediaDevices.getDisplayMedia({

video: true,

selfBrowserSurface: "exclude" // Avoid 🦶🔫.

});
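The three options above can be combined in a single getDisplayMedia() call. As a rough sketch, the hypothetical helper buildDisplayMediaOptions() below assembles them from a few flags; its name, parameters, and defaults are assumptions made for illustration and not part of any API.

```javascript
// Hypothetical helper: assembles getDisplayMedia() options from simple flags.
function buildDisplayMediaOptions({
  preferredSurface, // "browser", "window", "monitor", or undefined
  allowSwitching = true,
  excludeSelf = true,
} = {}) {
  const allowed = ["browser", "window", "monitor"];
  if (preferredSurface !== undefined && !allowed.includes(preferredSurface)) {
    throw new TypeError("Unknown displaySurface: " + preferredSurface);
  }
  return {
    // Pre-select a pane only when a preference was given.
    video: preferredSurface ? { displaySurface: preferredSurface } : true,
    surfaceSwitching: allowSwitching ? "include" : "exclude",
    selfBrowserSurface: excludeSelf ? "exclude" : "include",
  };
}
```

The result would be passed straight to navigator.mediaDevices.getDisplayMedia(). Note that displaySurface only pre-selects a pane, so the user can still pick something else; track.getSettings().displaySurface tells you what was actually shared.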

Conditional Focus API

The Conditional Focus API lets the capturing web app decide, once capture starts, whether the captured tab or window should be brought into focus or whether the capturing page should keep it. This is useful when the right choice depends on what the user picked: for instance, keeping focus on your own page for some tabs and switching to the captured surface for everything else. Here’s an example of how to use the Conditional Focus API:

First, create the controller:

const controller = new CaptureController();

// Prompt the user to share a tab, a window or a screen.

const stream =

await navigator.mediaDevices.getDisplayMedia({ controller });

const [track] = stream.getVideoTracks();

const displaySurface = track.getSettings().displaySurface;

Then you can conditionally set the focus behavior:

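// Retain focus if capturing a tab dialed to example.com.

// Focus any other tab or window. (Assumed completion: the original snippet is cut off mid-condition.)

const origin = track.getCaptureHandle()?.origin;

if (displaySurface == "browser" && origin == "https://example.com") {

controller.setFocusBehavior("no-focus-change");

} else if (displaySurface != "monitor") {

controller.setFocusBehavior("focus-captured-surface");

}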

You can call setFocusBehavior() arbitrarily many times before the promise resolves, or at most once immediately after the promise resolves. The last invocation overrides all previous invocations.

More precisely:

- The getDisplayMedia() returned promise resolves on a microtask. Calling setFocusBehavior() after that microtask completes throws an error.
- Calling setFocusBehavior() more than a second after capture starts is a no-op.
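One way to respect these timing rules is to keep the decision synchronous and make it right after the await, with no further awaits in between. The sketch below assumes two hypothetical helpers, chooseFocusBehavior() and captureWithFocusDecision(); the mediaDevices and controller arguments stand in for navigator.mediaDevices and a CaptureController, and keepFocusOrigin is whatever origin you want to keep focus for.

```javascript
// Hypothetical helper: picks a focus behavior from what was captured.
// Returns null for screens, where no focus decision is made.
function chooseFocusBehavior(displaySurface, capturedOrigin, keepFocusOrigin) {
  if (displaySurface === "monitor") return null;
  if (displaySurface === "browser" && capturedOrigin === keepFocusOrigin) {
    return "no-focus-change";
  }
  return "focus-captured-surface";
}

// Hypothetical helper: starts capture and decides focus immediately.
async function captureWithFocusDecision(mediaDevices, controller, keepFocusOrigin) {
  const stream = await mediaDevices.getDisplayMedia({ controller });
  // Everything below runs synchronously, right after the promise resolves.
  const [track] = stream.getVideoTracks();
  const displaySurface = track.getSettings().displaySurface;
  const origin =
    typeof track.getCaptureHandle === "function"
      ? track.getCaptureHandle()?.origin
      : undefined;
  const behavior = chooseFocusBehavior(displaySurface, origin, keepFocusOrigin);
  if (behavior !== null) {
    try {
      controller.setFocusBehavior(behavior);
    } catch (error) {
      // The decision window has closed; the browser default applies.
    }
  }
  return { stream, behavior };
}
```

Keeping chooseFocusBehavior() free of browser objects makes the decision easy to unit test, while the wrapper only takes care of calling setFocusBehavior() at the right moment.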

Conclusion

These lesser-known screen sharing APIs provide additional functionality and control that can be useful in certain situations. We hope this blog post has helped you discover new ways to implement screen sharing in your web applications. For more information on these APIs, check out the Chrome Developer Documentation.

We at Dyte love experimenting with new browser APIs and fun things to implement. If you think any of these screen sharing APIs would be beneficial for your use case, we would love to help you implement them with Dyte!