Our ChatGPT website chatbot, AI Expert System and AI Website Search are all based upon scraping your website. The technique is actually quite simple. So how do we end up with so much higher quality than most others?

What we do is really simple: we scrape your website, then store your website's text as context snippets in a database. When users ask our ChatGPT website chatbot a question, we use OpenAI's embedding API to look for the most relevant context snippets, and attach these as "context" to the question before sending it to ChatGPT. This allows OpenAI to answer the question using the supplied context as the foundation for its answer. Fundamentally, you could argue the following:

We send the question AND the answer to ChatGPT, and ChatGPT compiles a functioning response answering the question with the answer we’ve already sent to it as a part of the request.

The end result is that ChatGPT can answer questions it had no idea how to answer in its original state. You could argue that what we're doing is "fancy prompt engineering based upon automation, AI-based semantic search, and database lookups".
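To make the retrieval step concrete, here is a minimal Python sketch. It is not our production code, which runs on Hyperlambda. The three-dimensional vectors are hand-made stand-ins for real embeddings returned by OpenAI's embedding API. `Snippet`, `top_k` and `build_prompt` are hypothetical names used only for illustration.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Snippet:
    text: str
    embedding: List[float]  # in production: a vector from an embeddings API (assumed)


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(question_vec: List[float], snippets: List[Snippet], k: int = 2) -> List[Snippet]:
    """Return the k snippets whose embeddings are most similar to the question."""
    ranked = sorted(snippets, key=lambda s: cosine(question_vec, s.embedding), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: List[Snippet]) -> str:
    """Attach the retrieved snippets as context in front of the user's question."""
    context_block = "\n\n".join(s.text for s in context)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}"
    )


snippets = [
    Snippet("Product X costs $10.", [1.0, 0.0, 0.0]),
    Snippet("Product Y costs $20.", [0.9, 0.1, 0.0]),
    Snippet("Our office is in Oslo.", [0.0, 0.0, 1.0]),
]
question_vec = [1.0, 0.05, 0.0]  # stand-in embedding of the question below
best = top_k(question_vec, snippets, k=2)
prompt = build_prompt("What do X and Y cost?", best)
print(prompt)
```

The prompt that goes to the model contains the two pricing snippets and leaves out the unrelated Oslo snippet. This is what "sending the question AND the answer" looks like in practice.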

The above sounds simple, right? In fact, it is so simple that over the last few months, thousands of developers and companies have copied it since we originally invented the technique. Still, every time somebody contacts us for a quote, we hear the same thing over and over again:

I’ve tried dozens of your competitors, and you guys are simply the best!

Quality Website Scraping

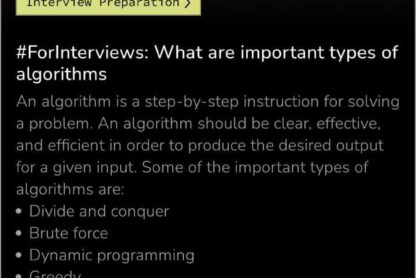

Everything relies upon having an amazing website scraper. This is where we are different, and quality website scraping is our unique selling point. To understand why, first realise that when you scrape your website, you need to create super high-quality data. The reason is that you want ChatGPT to be able to "create associations", so that users can ask questions such as "What's the difference between product x and y?"

The quality of your website chatbot is never higher than the quality of your context data

If you just retrieve the HTML and store it in your database, associations become impossible because of OpenAI's maximum token count. If your context snippets are too large, each question can only use one or two snippets as its context. In addition, you will add a lot of irrelevant HTML tags to your context. These become noise to ChatGPT and prevent it from returning a high-quality response.

What you want is a lot of small context snippets that our AI Search algorithms can easily retrieve, each describing one concept, and one concept only. This is counter-intuitive for most developers. We've heard mantras of "big data" for decades and been taught that machine learning relies upon large data sets, yet here the exact opposite is true: the smaller your data sets and context snippets, the higher the quality becomes.

Super high quality AI is about SMALL data sets!
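The arithmetic behind this is easy to sketch. Below, a fixed token budget is filled with snippets in relevance order. The 2,048-token budget and the per-snippet token counts are hypothetical numbers chosen only for illustration, and `pack_snippets` is not a real API.

```python
from typing import List


def pack_snippets(ranked_token_counts: List[int], budget: int) -> List[int]:
    """Return the indexes of the ranked snippets that fit in the token budget.

    Walks the snippets in relevance order and stops at the first one that
    would overflow the budget.
    """
    chosen, used = [], 0
    for index, tokens in enumerate(ranked_token_counts):
        if used + tokens > budget:
            break
        chosen.append(index)
        used += tokens
    return chosen


CONTEXT_BUDGET = 2048  # hypothetical: half of a 4,096-token model window

large = pack_snippets([1500, 1400, 1600], CONTEXT_BUDGET)  # whole pages as snippets
small = pack_snippets([200] * 12, CONTEXT_BUDGET)           # one concept per snippet
print(len(large), len(small))  # 1 10
```

With page-sized snippets, only one fits, so a question like "What's the difference between product x and y?" can never see both products. With small snippets, ten distinct concepts fit in the same budget.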

We solve this problem by chopping up each web page into multiple context snippets during scraping, resulting in many small snippets instead of one large one. In addition, we calculate the number of tokens each snippet consumes. If the number is larger than 50% of the maximum context token count for the model's configuration, we use ChatGPT to create a "summary" of the snippet before inserting it into the database as context data. Needless to say, we also remove all HTML tags as we scrape your website.
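The 50% rule can be sketched as follows, under some stated assumptions. `MAX_CONTEXT_TOKENS` is a hypothetical budget. `count_tokens` uses a rough 4-characters-per-token heuristic rather than the model's real tokenizer. The `summarize` callable stands in for the ChatGPT call we actually make; here it is a placeholder so the code runs offline.

```python
MAX_CONTEXT_TOKENS = 4096  # hypothetical context budget for the model configuration


def count_tokens(text: str) -> int:
    # Rough stand-in: about 4 characters per token for English text.
    # A real tokenizer for the target model would give exact counts.
    return max(1, len(text) // 4)


def prepare_snippet(text: str, summarize) -> str:
    """Summarize a snippet if it uses more than 50% of the context budget."""
    if count_tokens(text) * 2 > MAX_CONTEXT_TOKENS:
        return summarize(text)  # in production: a ChatGPT summarization call (not shown)
    return text


def fake_summarize(text: str) -> str:
    # Placeholder so the sketch runs offline; it truncates instead of summarizing.
    return text[:200] + " ..."


short_result = prepare_snippet("Product X costs $10.", fake_summarize)
long_result = prepare_snippet("word " * 10_000, fake_summarize)
print(short_result)
print(len(long_result))
```

Small snippets pass through untouched. Only oversized ones pay the cost of an extra model call.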

How our Website Scraper chops up your Website

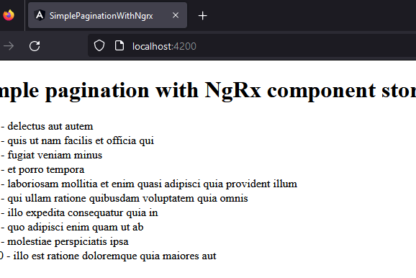

Our website scraper chops each page into multiple context snippets according to where its Hx tags are, creating one context snippet for each Hx tag combined with all paragraphs below it. This is why a website with 200 web pages can easily end up as 1,000 training snippets. In addition, we keep hyperlinks and images and convert them to Markdown. Combined with some intelligent prompt engineering trickery, this allows our chatbots to display images and hyperlinks, where most others cannot.
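The following is a simplified Python sketch of heading-based chunking, built on the standard library's `html.parser`. It is an illustration, not our actual scraper. `HeadingSplitter` and `split_page` are hypothetical names. It starts a new snippet at every `h1` to `h6`, drops `script` and `style` content, and turns links and images into Markdown.

```python
from html.parser import HTMLParser

HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
SKIPPED = {"script", "style"}  # content that never becomes context


class HeadingSplitter(HTMLParser):
    """Turn one HTML page into Markdown snippets, one per heading."""

    def __init__(self):
        super().__init__()
        self.snippets = []   # finished Markdown snippets
        self.current = []    # text fragments of the snippet being built
        self.href = None     # href of the <a> we are currently inside
        self.skip_depth = 0  # > 0 while inside <script> or <style>

    def finish_snippet(self):
        # Collapse whitespace on each line and drop empty lines.
        lines = (" ".join(line.split()) for line in "".join(self.current).split("\n"))
        text = "\n".join(line for line in lines if line)
        if text:
            self.snippets.append(text)
        self.current = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in SKIPPED:
            self.skip_depth += 1
        elif tag in HEADINGS:
            self.finish_snippet()  # every heading starts a new snippet
            self.current.append("#" * int(tag[1]) + " ")
        elif tag == "a":
            self.href = attrs.get("href") or ""
            self.current.append("[")
        elif tag == "img":
            self.current.append(f" ![{attrs.get('alt') or ''}]({attrs.get('src') or ''}) ")
        elif tag in ("p", "li", "br"):
            self.current.append("\n")

    def handle_endtag(self, tag):
        if tag in SKIPPED:
            self.skip_depth = max(0, self.skip_depth - 1)
        elif tag in HEADINGS:
            self.current.append("\n")
        elif tag == "a" and self.href is not None:
            self.current.append(f"]({self.href})")
            self.href = None

    def handle_data(self, data):
        if not self.skip_depth:
            self.current.append(data.replace("\n", " "))


def split_page(html: str) -> list:
    parser = HeadingSplitter()
    parser.feed(html)
    parser.close()
    parser.finish_snippet()  # flush the text after the last heading
    return parser.snippets


page = """<html><head><style>p { color: red; }</style></head><body>
<h1>Products</h1>
<p>We sell two products.</p>
<h2>Product X</h2>
<p>Product X costs $10. <a href="/pricing">See pricing</a></p>
<img src="/x.png" alt="Product X">
<h2>Product Y</h2>
<p>Product Y costs $20.</p>
</body></html>"""

for snippet in split_page(page):
    print(snippet, end="\n\n")
```

Each heading becomes the first line of its own snippet. The link and image under "Product X" survive as Markdown, so a chatbot can render them later.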

In addition to the above technique, we also prevent the same training snippet from being inserted twice. A typical website contains navigation and footer elements that are repeated on every single page. If we inserted these repeated snippets every time, any question that triggers them would pull the same context snippet in multiple times. That wastes valuable OpenAI tokens and prevents ChatGPT from returning high-quality responses.
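One simple way to implement this is to fingerprint each snippet after normalizing its whitespace and case, and to skip any snippet whose fingerprint has already been seen. This is a minimal sketch under that assumption. `fingerprint` and `insert_unique` are hypothetical names, and in a real system the `seen` set would live in the database rather than in memory.

```python
import hashlib
from typing import List, Set


def fingerprint(snippet: str) -> str:
    # Normalize whitespace and case so trivially different copies match.
    normalized = " ".join(snippet.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def insert_unique(snippets: List[str], seen: Set[str]) -> List[str]:
    """Return only the snippets not inserted before, recording them in seen."""
    inserted = []
    for snippet in snippets:
        key = fingerprint(snippet)
        if key in seen:
            continue  # e.g. the same footer scraped from another page
        seen.add(key)
        inserted.append(snippet)
    return inserted


seen: Set[str] = set()
first = insert_unique(["## About\nWe build chatbots.", "Contact us | Privacy"], seen)
second = insert_unique(["## Pricing\nPlans start at $10.", "Contact us  |  Privacy"], seen)
print(first)
print(second)
```

This catches exact and whitespace-only duplicates such as repeated footers. Near-duplicates with different wording would need a fuzzier comparison.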

Notice that our ChatGPT website scraping technology can even traverse websites without a sitemap, intelligently parsing URLs from your HTML instead. It prefers sitemaps and will use yours if it exists, but it works even if your site does not have one. This is similar to what Google does. Even though Google obviously has 25 years of experience doing this, I would argue our website scraper is probably almost as good as Google's crawling technology.
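Here is a minimal sketch of "sitemap first, HTML links as fallback", written with Python's standard library. Fetching is deliberately left out so the sketch runs offline: `fetch` is any function returning a page's HTML or `None`. The sitemap parser assumes a plain `<urlset>` sitemap rather than a sitemap index. `crawl`, `same_site_links` and the other names are hypothetical.

```python
import xml.etree.ElementTree as ET
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse

SITEMAP_NS = "{http://www.sitemaps.org/schemas/sitemap/0.9}"


def urls_from_sitemap(xml_text):
    # Assumes a plain <urlset> sitemap, not a sitemap index file.
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iter(SITEMAP_NS + "loc") if loc.text]


class LinkCollector(HTMLParser):
    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def same_site_links(page_url, html):
    """Absolute, fragment-free links from html that stay on page_url's host."""
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    host = urlparse(page_url).netloc
    links = []
    for href in collector.hrefs:
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        parsed = urlparse(absolute)
        if parsed.scheme in ("http", "https") and parsed.netloc == host:
            links.append(absolute)
    return links


def crawl(base_url, fetch, sitemap_xml=None, limit=500):
    """Prefer the sitemap; otherwise walk same-site links breadth-first."""
    if sitemap_xml:
        return urls_from_sitemap(sitemap_xml)[:limit]
    order, seen = [base_url], {base_url}
    queue = deque([base_url])
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # page could not be fetched
        for link in same_site_links(url, html):
            if link not in seen and len(order) < limit:
                seen.add(link)
                order.append(link)
                queue.append(link)
    return order


site = {  # a tiny fake website standing in for real HTTP requests
    "https://example.com/": '<a href="/about">About</a> <a href="https://other.org/">Elsewhere</a>',
    "https://example.com/about": '<a href="/#top">Home</a> <a href="/blog/">Blog</a>',
    "https://example.com/blog/": "<p>No links here.</p>",
}
sitemap = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/a</loc></url>"
    "<url><loc>https://example.com/b</loc></url></urlset>"
)
print(crawl("https://example.com/", site.get))
print(crawl("https://example.com/", site.get, sitemap_xml=sitemap))
```

External links are ignored, and `#fragment` variants of already-known pages are not crawled twice.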

For the record, you can see our website crawler in your web server's logs, since we correctly identify it as a crawler. And yes, for the most part we also respect robots.txt files, although some additional work remains to be done here, which will be implemented in the future.
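Both points, identifying the crawler and honouring robots.txt, can be sketched with Python's standard library. `urllib.robotparser.RobotFileParser` evaluates already-downloaded robots.txt rules. A descriptive `User-Agent` header is what makes a crawler recognisable in server logs. The user agent string and helper names below are hypothetical, not the ones our crawler sends.

```python
from urllib.request import Request
from urllib.robotparser import RobotFileParser

# Hypothetical crawler identity; a descriptive User-Agent is what makes the
# crawler recognisable in your web server's logs.
USER_AGENT = "ExampleChatbotCrawler/1.0 (+https://example.com/crawler-info)"


def is_allowed(robots_txt: str, url: str, user_agent: str = USER_AGENT) -> bool:
    """Check a URL against robots.txt rules that have already been downloaded."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules.can_fetch(user_agent, url)


def build_request(url: str) -> Request:
    # Attach the crawler's identity to every request (nothing is sent here).
    return Request(url, headers={"User-Agent": USER_AGENT})


robots = "User-agent: *\nDisallow: /admin/\n"
print(is_allowed(robots, "https://example.com/admin/users"))  # False
print(is_allowed(robots, "https://example.com/blog/"))        # True
```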

Periodic crawling

Our chatbot technology is built upon Hyperlambda, which makes it very easy to create scheduled tasks that execute periodically according to some repetition pattern. Once every day we execute such a scheduled task, which crawls your site and checks for new URLs. Each new URL is then scraped, and context data is created for it using the process described above.

This implies that if you add a new page or article to your website, our chatbots will be able to answer questions related to it within 24 hours, automatically and without any effort from you. The task is executed on a background thread and is 100% async in nature, implying the server CPU cost of this process is almost zero. For the record, this is much harder to do in PHP, which lacks native multithreading.
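Our scheduled task is written in Hyperlambda. The following is only an analogous sketch in Python's `asyncio`, showing the same idea: a periodic job that discovers the site's current URLs, scrapes just the ones it hasn't seen before, and then sleeps. `discover`, `scrape`, `crawl_for_new_urls` and `run_periodically` are hypothetical names. In production, the interval would be one day (`24 * 60 * 60` seconds); the demo uses zero so it finishes instantly.

```python
import asyncio
from typing import Awaitable, Callable, List, Optional, Set


async def crawl_for_new_urls(
    discover: Callable[[], Awaitable[List[str]]],
    known: Set[str],
    scrape: Callable[[str], Awaitable[None]],
) -> List[str]:
    """Scrape only URLs that were not present during earlier runs."""
    current = await discover()
    new_urls = [url for url in current if url not in known]
    for url in new_urls:
        await scrape(url)
        known.add(url)
    return new_urls


async def run_periodically(job, interval_seconds: float, iterations: Optional[int] = None):
    """Run job repeatedly, sleeping between runs; iterations=None runs forever."""
    count = 0
    while iterations is None or count < iterations:
        await job()
        count += 1
        await asyncio.sleep(interval_seconds)  # awaiting sleep uses no CPU


async def demo() -> List[str]:
    known = {"https://example.com/"}
    scraped: List[str] = []
    # Simulate the site growing between two daily runs.
    snapshots = [
        ["https://example.com/"],
        ["https://example.com/", "https://example.com/new-article"],
    ]

    async def discover() -> List[str]:
        return snapshots.pop(0)

    async def scrape(url: str) -> None:
        scraped.append(url)

    async def job() -> None:
        await crawl_for_new_urls(discover, known, scrape)

    await run_periodically(job, interval_seconds=0, iterations=2)
    return scraped


scraped = asyncio.run(demo())
print(scraped)
```

Because the job spends nearly all of its time awaiting network I/O or sleeping, it hardly touches the CPU between runs.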

Website Scraping Advanced Features

In addition to the above, we also allow for "spicing" models with individual pages taken from, for instance, Wikipedia, CNBC, or "whatever" really. We use the same technique as described above, except that when spicing a model we only retrieve the exact URL specified, without crawling it. You can also crawl multiple sites in the same model, but only one base URL can be crawled periodically. The other sites are scraped once, while that single base URL is re-scraped periodically as new pages are added.
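The rules above map naturally onto a small configuration structure. This is a hypothetical sketch, not our actual data model. `ModelSources` and `plan_jobs` are illustrative names. The "only one periodic site" rule is enforced simply by making it a single optional field rather than a list.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ModelSources:
    periodic_site: Optional[str] = None                      # the one base URL re-crawled daily
    one_off_sites: List[str] = field(default_factory=list)   # crawled once
    spice_pages: List[str] = field(default_factory=list)     # single pages, never crawled


def plan_jobs(sources: ModelSources) -> List[Tuple[str, str, str]]:
    """Turn a model's sources into (action, url, schedule) jobs."""
    jobs = []
    if sources.periodic_site:
        jobs.append(("crawl", sources.periodic_site, "daily"))
    jobs += [("crawl", url, "once") for url in sources.one_off_sites]
    jobs += [("fetch_page_only", url, "once") for url in sources.spice_pages]
    return jobs


sources = ModelSources(
    periodic_site="https://example.com/",
    one_off_sites=["https://docs.example.org/"],
    spice_pages=["https://en.wikipedia.org/wiki/Web_scraping"],
)
for job in plan_jobs(sources):
    print(job)
```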

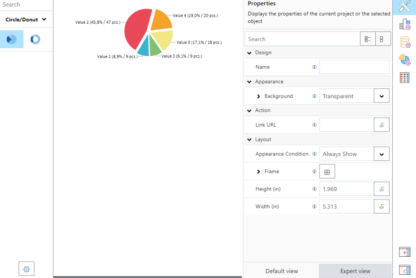

In addition to the above, you can also manually insert context data and perform any CRUD operation on your training data, including filtering, searching, ordering, creating, inserting, updating and deleting context data. Interestingly, this part of our technology was created in seconds using Magic's Low-Code features, which wrapped the related database tables in CRUD endpoints 100% automatically. Magic is a Low-Code platform allowing us to deliver features a "bajillion times faster" than everybody else, and we are taking advantage of that fact for obvious reasons.
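Magic generates these CRUD endpoints for us automatically. Underneath, they boil down to ordinary SQL operations on a table of snippets. The following sketch shows those operations with Python's built-in `sqlite3` module. The `snippets` table, its columns and the helper functions are hypothetical and do not reflect our actual schema.

```python
import sqlite3

SCHEMA = """CREATE TABLE snippets (
    id INTEGER PRIMARY KEY,
    url TEXT,                -- NULL for manually inserted context data
    content TEXT NOT NULL
)"""


def create_snippet(conn, url, content):
    cur = conn.execute("INSERT INTO snippets (url, content) VALUES (?, ?)", (url, content))
    conn.commit()
    return cur.lastrowid


def search_snippets(conn, term, limit=25):
    # Filter with LIKE, order by id, and page with LIMIT.
    rows = conn.execute(
        "SELECT id, url, content FROM snippets WHERE content LIKE ? ORDER BY id LIMIT ?",
        (f"%{term}%", limit),
    )
    return rows.fetchall()


def update_snippet(conn, snippet_id, content):
    conn.execute("UPDATE snippets SET content = ? WHERE id = ?", (content, snippet_id))
    conn.commit()


def delete_snippet(conn, snippet_id):
    conn.execute("DELETE FROM snippets WHERE id = ?", (snippet_id,))
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
scraped_id = create_snippet(conn, "https://example.com/x", "Product X costs $10.")
manual_id = create_snippet(conn, None, "Manually added: we are open 9 to 17.")
update_snippet(conn, scraped_id, "Product X costs $12.")
delete_snippet(conn, manual_id)
print(search_snippets(conn, "Product"))
```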

Conclusion

I've seen "a bajillion" copycat companies pop up from apparently "nowhere" these last months, trying to copy our technology since it "seems so easy". However, creating a chatbot that performs at 50% is easy; any schmuck with a PHP editor can do that. The difference is in the quality: where any schmuck with an IDE can deliver 50%, our stuff delivers 100%, almost automatically "out of the box".

For instance, PHP doesn't even have native support for background threads. How are you going to implement periodic crawling without background threads? Matching the quality we've got is extremely hard using traditional programming languages such as PHP or Python.

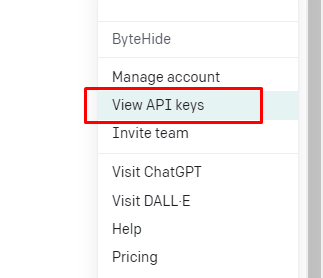

My suggestion is that instead of implementing this yourself, which is much harder than it looks, you rely upon the fact that we've got APIs for "everything". These allow you to create your own ChatGPT chatbot frontend while using our API in the background, so you could integrate a ChatGPT chatbot with, for instance …

- Telegram

- iPhone SDK

- Android SDK

- “whatever” really …

WITHOUT having to reinvent the wheel, relying instead upon our amazing ChatGPT website scraping technology. We also have a very lucrative partner program for those wanting to incrementally build on top of our stuff. We'd love to help you market whatever you build, since instead of becoming a competitor, you'd enter a symbiotic relationship with us 😊

Besides, not even Jasper can compare to our tech foundation. Even companies with dozens of employees that have existed for years pale in comparison to our tech and quality. This might sound incredibly arrogant, especially considering there are only 3 people working at AINIRO, but we've literally got the best ChatGPT website scraping technology on the planet! 😁