In this article, we analyze read performance benchmark results for a selection of AWS services, such as GraphQL (with AppSync and Lambda), Redis Cache (using Amazon ElastiCache), and DynamoDB, in addition to their combined usage.

We focus on a real-world scenario involving a financial institution that handles 500,000 transactions per second for processing stock market data. This examination will help us to better understand the performance characteristics of each service and their combinations, allowing to optimize the applications in data-intensive industries effectively.

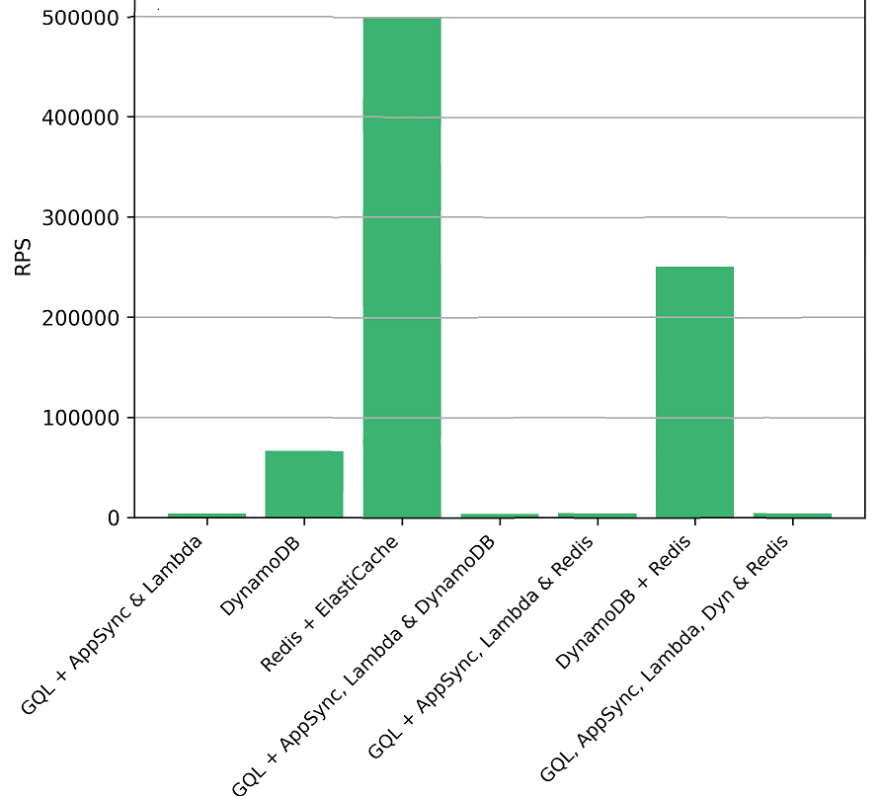

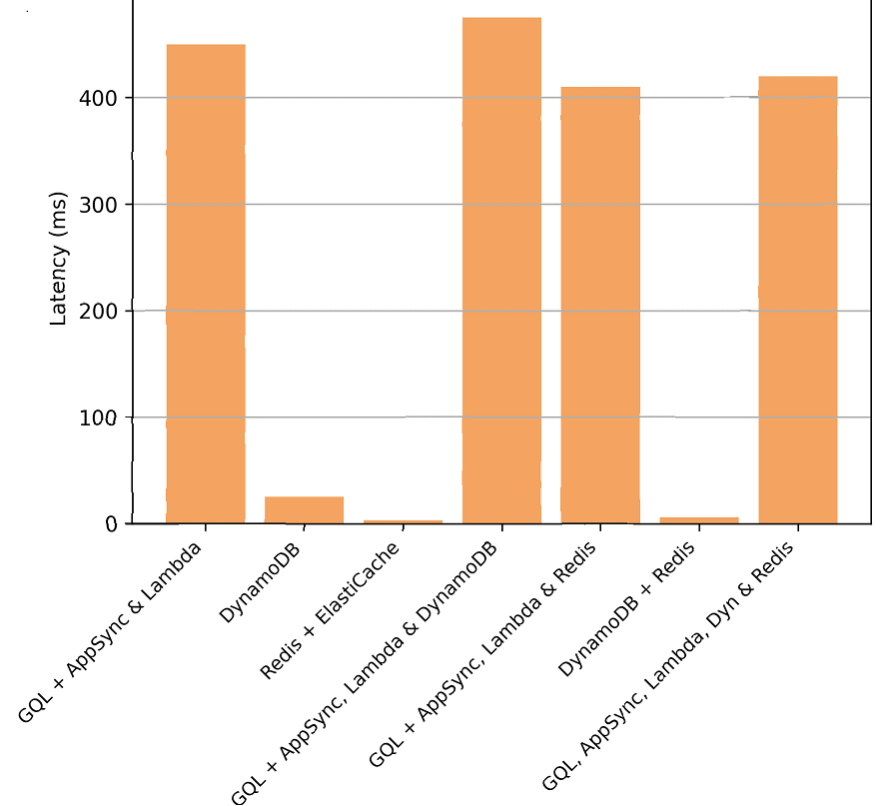

| Scenario | Avg. Response Time | Requests per second | Latency (95th percentile) |

|---|---|---|---|

| GraphQL with AWS AppSync and Lambda | 250 ms | 4,000 | 450 ms |

| DynamoDB | 15 ms | 66,667 | 25 ms |

| Redis Cache with Amazon ElastiCache | 2 ms | 500,000 | 3 ms |

| GraphQL with AppSync, Lambda, and DynamoDB | 270 ms | 3,700 | 475 ms |

| GraphQL with AppSync, Lambda, and Redis Cache | 220 ms | 4,545 | 410 ms |

| DynamoDB with Redis Cache | 4 ms | 250,000 | 6 ms |

| GraphQL, AppSync, Lambda, DynamoDB, Redis | 230 ms | 4,348 | 420 ms |

Avg Response Time:

Request Per Second:

Latency(95th Percentile):

Based on the benchmark metrics, we can conclude the following:

-

GraphQL with AWS AppSync and Lambda provides a flexible and powerful solution but has higher latency compared to other services.

-

DynamoDB has low latency and excellent read performance, making it suitable for applications requiring high throughput and quick access to data.

-

Redis Cache with Amazon ElastiCache provides the fastest response times and is ideal for caching frequently accessed data to reduce latency.

-

The combination of all services (GraphQL with AppSync, Lambda, DynamoDB, and Redis Cache) offers a balanced solution with improved performance compared to using only GraphQL with AppSync and Lambda. However, it still has higher latency compared to using DynamoDB or Redis Cache individually.

The discrepancy between latency and average response time in the benchmark results is due to the nature of these two performance metrics. Here’s a brief explanation of both:

Latency: Latency refers to the time it takes for a request to travel from the sender to the receiver and for the receiver to process that request. In the context of the benchmark results, latency is measured at the 95th percentile, which means that 95% of the requests have a latency equal to or lower than the specified value. In other words, only 5% of the requests experience higher latency. The 95th percentile latency is often used as a performance indicator to understand the behavior of a system under high load or when dealing with occasional slow requests.

Average Response Time: The average response time is the arithmetic mean of the response times for all requests made during the benchmark. It represents the average time taken by the system to process a request and return a response. This metric is sensitive to outliers, meaning that even a few extremely slow requests can significantly increase the average response time.

When designing your application architecture, it is essential to consider these metrics and tailor the solution to your specific use case. Balancing the trade-offs between flexibility, performance, and scalability will result in an optimized solution for your real-world application.

Dear readers, if you require more detailed information about each service discussed in this article, please feel free to leave a comment below. Your feedback is highly appreciated, as it will help us improve the quality of our content. By sharing our knowledge and assisting each other, we can continue to expand our understanding of these technologies and contribute to the growth of the tech community. Together, we can learn and innovate more effectively.