This is a small demonstration of how little you need to host your own git repositories and run a modest Continuous Integration (CI) system for them. All you need is a unixy server you can ssh into, and you can just as well try this out locally.

We will use Redis at one point to queue tasks, but strictly speaking this could be done without additional software. To keep things simple, this setup only works with one repository, since the goal is to describe a pattern.

The source code for everything that follows can be found here.

Hosting bare git repositories

Assuming you can ssh into a server and create a directory, this is all you need to create a shareable git repository:

```
$ git init --bare repo.git
```

Ideally you use a dedicated user for this (named git) with git-shell as its login shell, which restricts that account to git operations. By convention, bare repositories are stored in directories whose names end in .git. You can now clone this repository from your machine with:

```
$ git clone ssh://git@host.example.com/~git/repo.git
```
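Since none of this strictly needs a server, the same setup can be tried locally, with a file path standing in for the ssh URL. A minimal sketch, assuming git is installed; the temporary directory, the README file and the commit identity are made up for illustration:

```shell
#!/usr/bin/env bash
# Local stand-in for the server: a bare repository in a temporary directory,
# cloned via its file path instead of ssh://
set -euo pipefail

workdir=$(mktemp -d)

# "Server side": the bare repository, named *.git by convention
git init --quiet --bare "$workdir/repo.git"

# "Client side": clone it (git warns about cloning an empty repository)
git clone --quiet "$workdir/repo.git" "$workdir/clone" 2>/dev/null

# Commit a file and push it back to the bare repository
cd "$workdir/clone"
echo "hello" > README
git add README
git -c user.name="Example" -c user.email="example@example.com" \
    commit --quiet -m "first commit"
git push --quiet origin HEAD

echo "bare repository: $workdir/repo.git"
```

On a real server, the init and clone steps become the ssh-based commands shown above.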

post-receive hooks

A post-receive hook is an executable that git runs on the server once a push has been received and the refs have been updated. We will use an executable shell script named post-receive, which needs to go inside the hooks directory of the (bare) repository on the server side.

The most trivial approach would be to do the actual work right in the hook, but that would block the git push on the client side until the work is done. Instead, we just want to enqueue a new job, print a handle and exit. If what you do only takes a short amount of time, you can stop here. Alternatively, you can use this repository for deployments only, by adding it as a separate remote. But the goal here is to run tests on every push, so we will separate job creation from the actual run.

This is where Redis comes into play for job queueing. We assume Redis is installed and running, and we use redis-cli to access it from the script (the hook also uses the uuid command-line tool to generate job ids). We will use two data structures: a list of jobs waiting to be executed, each referenced by a UUID we generate, and, for every job, a hash stored under that UUID that holds the git revision, the ref, the job's status and its output.

Note that git does not pass arguments to this hook; instead it writes one line per updated ref to the script's stdin, containing the old revision (before the push), the new revision and the ref name, separated by spaces.

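```shell
#!/bin/bash

# git writes one line per updated ref to stdin: "<old-rev> <new-rev> <ref>"
while read -r _ newrev ref
do
    # generate a job id (uuid tool; uuidgen is a common alternative)
    id=$(uuid)
    # anything echoed here is shown to the pushing client, prefixed with "remote:"
    echo "Starting CI job $id"
    # store the job details in a hash named after the job id
    redis-cli hset "$id" rev "$newrev" >/dev/null
    redis-cli hset "$id" ref "$ref" >/dev/null
    # enqueue last, so the runner never sees a job without details;
    # RPUSH here plus BLPOP in the runner processes jobs first in, first out
    redis-cli rpush jobs "$id" >/dev/null
done
```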
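To check the hook's logic without pushing or running Redis, one can feed it input in the format git uses. In this sketch the loop is wrapped in a hypothetical enqueue_jobs function, and uuid and redis-cli are replaced by stub functions that only print what they would do. It also shows why the hook loops: a single push can update several refs, and git writes one line per ref. The list push uses RPUSH so that, combined with the runner's BLPOP, jobs come out in the order they were pushed.

```shell
#!/usr/bin/env bash
# Stubs (for local testing only): print instead of talking to Redis
uuid() { echo "job-$RANDOM$RANDOM"; }
redis-cli() { echo "redis-cli $*"; }

# A variant of the hook's loop: one job per "<old> <new> <ref>" line on stdin
enqueue_jobs() {
    local newrev ref id
    while read -r _ newrev ref; do
        id=$(uuid)
        echo "Starting CI job $id"
        redis-cli hset "$id" rev "$newrev"
        redis-cli hset "$id" ref "$ref"
        # RPUSH + the runner's BLPOP = first in, first out
        redis-cli rpush jobs "$id"
    done
}

# Simulate a push that updated two refs at once (revisions shortened)
printf '%s %s %s\n' \
    1111111 2222222 refs/heads/main \
    3333333 4444444 refs/heads/feature | enqueue_jobs
```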

Defining build jobs

By convention, our system will run whatever is in an executable script named ci.sh. The drawback is that this only works in trusted setups: anyone who can push can run arbitrary code on the server, so access to the repository needs to be guarded. The big advantage is that we don’t need to come up with a job definition DSL or a cumbersome file format.

Our convention will also be that the script is passed one argument, the full name of the git ref (for example refs/heads/main), so it can decide what to do based on the branch we are on.

Let’s just put this into an executable file named ci.sh at the root of the repository:

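```shell
#!/usr/bin/env bash

# the git ref gets passed in as the only argument
ref="$1"

# pretend we're running tests
echo "running tests"

# only deploy if we're on the main branch; using `if` rather than
# `[[ ... ]] && echo` keeps the exit status at 0 on other branches,
# so the runner does not report those builds as failed
if [[ "$ref" == "refs/heads/main" ]]; then
    echo "Deploying"
fi
```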
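Since the ref is the script's only input, a case statement is one way to grow this beyond a single check. A sketch: run_ci is a hypothetical function name, the echo lines are placeholders for real test, deploy and release commands, and the tag handling is an illustrative addition:

```shell
#!/usr/bin/env bash
# Hypothetical ci.sh variant that dispatches on the git ref given as $1.
# set -e makes the script exit non-zero as soon as a step fails, which the
# runner reports as "failed".
set -euo pipefail

run_ci() {
    local ref="$1"
    echo "running tests"                       # placeholder for the test suite
    case "$ref" in
        refs/heads/main) echo "Deploying" ;;   # placeholder deploy step
        refs/tags/*) echo "Releasing ${ref#refs/tags/}" ;;  # placeholder release
        *) echo "No deployment for $ref" ;;
    esac
}

# Default to main when called without an argument (handy for local runs)
run_ci "${1:-refs/heads/main}"
```

Every branch of the case ends in a command that succeeds, so only a failing step turns a build red.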

The build runner

Now that jobs are queued, the last missing piece is a job runner. We will use Redis’ BLPOP command to block until the jobs list has a new job for us. The job id gives us the revision we need to check out and lets us write back the output and status of the job.

Note that this assumes a clone of the repository already exists in a directory called test, right next to the script.

tiny-ci.sh

```shell
#!/usr/bin/env bash

# tiny-ci.sh is supposed to run on the server where your git repository lives.
# The logic in here will run in an infinite loop:
# * (block and) wait for a job
# * run it

# The checkout of the repository lives in "test", right next to this script.
# An absolute path keeps `cd` working on every iteration of the loop.
repo_dir="$(cd "$(dirname "$0")" && pwd)/test"

while :
do
    # Announce that we're waiting
    echo "Job runner waiting"

    # We are using https://redis.io/commands/blpop to block until we have a new
    # message on the "jobs" list. We use `tail` to get the last line because the
    # output of BLPOP is of the form "list-that-got-an-element\nelement"
    jobid=$(redis-cli blpop jobs 0 | tail -n 1)

    # Skip empty replies (e.g. if Redis is unreachable) instead of busy-looping
    if [ -z "$jobid" ]; then sleep 1; continue; fi

    # The message we received will have the job uuid
    echo "Running job $jobid"

    # Get the git revision we're supposed to check out
    rev=$(redis-cli hget "$jobid" rev)
    echo "Checking out revision $rev"

    # Get the git ref
    ref=$(redis-cli hget "$jobid" ref)

    # Prepare the repository by getting that commit; if that fails, running
    # ci.sh would test a stale checkout, so mark the job as failed instead
    cd "$repo_dir" || exit 1
    if ! { git fetch && git reset --hard "$rev"; }; then
        redis-cli hset "$jobid" status "failed" >/dev/null
        continue
    fi

    # Actually run the job and save its output (stdout and stderr)
    if output=$(./ci.sh "$ref" 2>&1); then
        status="success"
    else
        status="failed"
    fi

    # Update the result status and the job output
    redis-cli hset "$jobid" status "$status" >/dev/null
    redis-cli hset "$jobid" output "$output" >/dev/null

    echo "Job $jobid done"
done
```

Running it

Summing up:

- there’s a bare git repository somewhere, called test.git, with the post-receive hook from above in its hooks directory
- we can clone the empty repo (or create a new one and add the respective remote)
- on the server hosting the git repository, we clone test.git into test and place tiny-ci.sh next to it
- we run builds by starting tiny-ci.sh on the server hosting the repository
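The server-side part of this list can be scripted. A sketch, assuming git and the install utility are available; setup_tiny_ci is a hypothetical helper, and its second argument is whatever file holds the post-receive script from above:

```shell
#!/usr/bin/env bash
# Hypothetical helper that creates the server-side layout:
#   <base>/test.git   bare repository with the post-receive hook installed
#   <base>/test       working clone that tiny-ci.sh (placed in <base>) uses
set -euo pipefail

setup_tiny_ci() {
    local base="$1" hook="$2"
    git init --quiet --bare "$base/test.git"
    # git only runs the hook if it is executable and named exactly post-receive
    mkdir -p "$base/test.git/hooks"
    install -m 755 "$hook" "$base/test.git/hooks/post-receive"
    # cloning the still-empty repository prints a warning, hence 2>/dev/null
    git clone --quiet "$base/test.git" "$base/test" 2>/dev/null
}
```

After something like setup_tiny_ci "$HOME" ./post-receive, tiny-ci.sh would be copied into the same base directory and started there.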

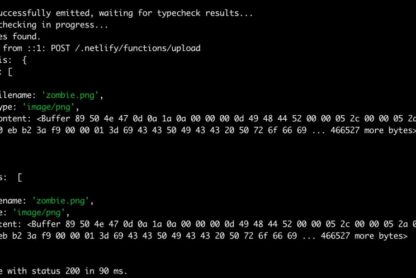

Now if we git push a new commit to the main branch with the ci.sh file from above, the push output will include the job id:

```
Enumerating objects: 5, done.
...
remote: Starting CI job dab82634-21cc-11eb-b3b3-9b8767dff47c
```

Checking build status

Knowing a job’s UUID, the easiest way to get the status of a build is the --csv output of Redis’ HGETALL command:

```
$ ssh example.com redis-cli --csv hgetall "$JOB_UUID"
"rev","f0706ea18a22031f84619b1161c8fbdb0dcd6850","ref","refs/heads/master","status","success","output","running tests\nDeploying"
```

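To wait for a build instead of checking back manually, a small polling loop on top of HGET is enough. A sketch, assuming it runs on the server next to Redis (from your machine the redis-cli calls would have to go through ssh as in the command above); wait_for_job and POLL_INTERVAL are made-up names:

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a job's hash until the runner has written a status,
# then print the status and the captured output.
wait_for_job() {
    local id="$1" status
    # HGET prints an empty line while the field does not exist yet
    until status=$(redis-cli hget "$id" status) && [ -n "$status" ]; do
        sleep "${POLL_INTERVAL:-2}"
    done
    echo "$status"
    redis-cli hget "$id" output
}
```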
Possible further improvements

- multiple repositories

Supporting more than one repository would mean changing the post-receive hook to put jobs in a list named job-${REPONAME} and having the worker pick the repository based on the list a job came from. Note that BLPOP accepts several lists to watch and also returns the name of the list it popped from.
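With per-repository lists, a worker can hand all list names to BLPOP and use the first line of the reply to tell which repository a job belongs to. A sketch, assuming the job-${REPONAME} naming; next_job and the globals it sets are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical sketch: block on several per-repository job lists at once.
# BLPOP returns two lines: the name of the list and the popped element.
# Sets the globals: list, jobid and repo.
next_job() {
    local reply
    reply=$(redis-cli blpop "$@" 0) || return 1
    list=$(head -n 1 <<<"$reply")    # e.g. job-website
    jobid=$(tail -n 1 <<<"$reply")   # the job uuid
    repo=${list#job-}                # strip the prefix to get the repository
}
```

A worker would then call something like next_job job-website job-docs and check out "$repo" before running the job.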

- expiring job results

Creating a key for every job pollutes the Redis database unnecessarily. Since each job is a hash, setting an expiry on it with EXPIRE (SETEX only applies to plain string values) would make the keys go away after an hour, a day or a week. Redis is meant as short-term storage here, not as a long-term archive of job results.
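Because jobs are stored as hashes, the expiry would be set with EXPIRE right after the hook writes the job details. A sketch of that step; store_job and JOB_TTL (one week here) are hypothetical, and the queueing itself stays as in the hook:

```shell
#!/usr/bin/env bash
# Hypothetical variant of the hook's "store job details" step with an expiry.
JOB_TTL=${JOB_TTL:-604800}   # one week in seconds (assumed retention period)

store_job() {
    local id="$1" newrev="$2" ref="$3"
    redis-cli hset "$id" rev "$newrev" >/dev/null
    redis-cli hset "$id" ref "$ref" >/dev/null
    # EXPIRE works on keys of any type, hashes included; modifying fields with
    # HSET later on (as the runner does) leaves the timeout in place
    redis-cli expire "$id" "$JOB_TTL" >/dev/null
}
```

The TTL should be comfortably longer than jobs ever wait in the queue; otherwise the runner may pop an id whose details have already expired.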

- multiple workers

Scaling to multiple workers on the same machine would need separate working directories (and some process isolation, depending on the tasks run in there). Scaling to multiple machines would need access to a central Redis instance for job distribution.
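For several workers on one machine, each could keep its own clone so that one job’s git reset never changes the files another job is testing. A sketch, assuming workers are numbered; prepare_worker_dir and the work/<id> layout are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical sketch: one checkout per worker (work/1, work/2, ...) so that
# concurrent jobs do not reset each other's working tree.
prepare_worker_dir() {
    local repo_url="$1" worker_id="$2"
    local dir="work/$worker_id"
    # clone only once; later jobs reuse the checkout and just fetch
    if [ ! -d "$dir/.git" ]; then
        git clone --quiet "$repo_url" "$dir"
    fi
    echo "$dir"
}
```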

- worker isolation / sandboxing

For more complex tasks some kind of process and file-system isolation is necessary. The worker could spin up VMs or Docker containers. The build system used on builds.sr.ht for instance uses a Docker container run as an unprivileged user in a KVM qemu machine.
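As a rough idea of the container route, the runner could start ci.sh inside a throwaway Docker container instead of directly. A sketch, assuming Docker is installed; run_in_container, the CI_IMAGE variable and the debian:stable-slim default are illustrative choices, and this is much weaker isolation than a VM:

```shell
#!/usr/bin/env bash
# Hypothetical sketch: run ci.sh for a checkout inside a throwaway container.
run_in_container() {
    local checkout="$1" ref="$2"
    # --rm: remove the container afterwards
    # --network none: no network access (drop it if ci.sh needs to deploy)
    # --user: don't run as root inside the container
    # :ro mounts the checkout read-only; builds that write files need a copy
    docker run --rm \
        --network none \
        --user "$(id -u):$(id -g)" \
        -v "$checkout:/src:ro" \
        -w /src \
        "${CI_IMAGE:-debian:stable-slim}" \
        ./ci.sh "$ref"
}
```

docker run exits with the status of the command inside the container, so the runner could call run_in_container "$repo_dir" "$ref" in place of ./ci.sh "$ref" without changing its success/failure logic.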

- timestamps

For convenience you would definitely want timestamps for every operation. They also make queries like “the last five jobs” possible and allow maintenance on job results based on their age.
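With timestamps, a sorted set scored by creation time answers “the last five jobs” directly. A sketch, assuming a hypothetical jobs-by-time key next to the job hashes; record_created and last_jobs are made-up names:

```shell
#!/usr/bin/env bash
# Hypothetical sketch: record a job's creation time in its hash and index the
# job in a sorted set scored by that time (Unix seconds).
record_created() {
    local id="$1" now
    now=$(date +%s)
    redis-cli hset "$id" created "$now" >/dev/null
    redis-cli zadd jobs-by-time "$now" "$id" >/dev/null
}

# Print the ids of the N most recent jobs (default 5), newest first
last_jobs() {
    local n="${1:-5}"
    redis-cli zrevrange jobs-by-time 0 "$((n - 1))"
}
```

Maintenance works along the same lines: ZRANGEBYSCORE jobs-by-time -inf with a cutoff timestamp lists the jobs older than that cutoff.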

- notifications

Any CI system has some form of notifications, and the simplest form would be to send one from the script, right at the end. But this only covers the success case, since a failing script may never reach its last line, so a better approach is to create a notification queue and have a notification worker react to it.
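A sketch of the queue-based approach, assuming the runner additionally pushes each finished job id onto a hypothetical notifications list after writing the status. send_notification is a placeholder for mail, a chat webhook or similar:

```shell
#!/usr/bin/env bash
# Hypothetical notification worker. Assumes the runner does, after a job:
#   redis-cli rpush notifications "$jobid"

# Placeholder: replace with mail, a chat webhook, ...
send_notification() {
    echo "Job $1 finished: $2"
}

# Handle a single notification: wait for a job id, look up its status
notify_once() {
    local id status
    id=$(redis-cli blpop notifications 0 | tail -n 1)
    status=$(redis-cli hget "$id" status)
    send_notification "$id" "$status"
}

# Run forever, one notification at a time
notify_loop() {
    while :; do
        notify_once
    done
}
```

As long as the runner pushes the id after writing the status in every case, failed jobs get reported just like successful ones.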